最近更新

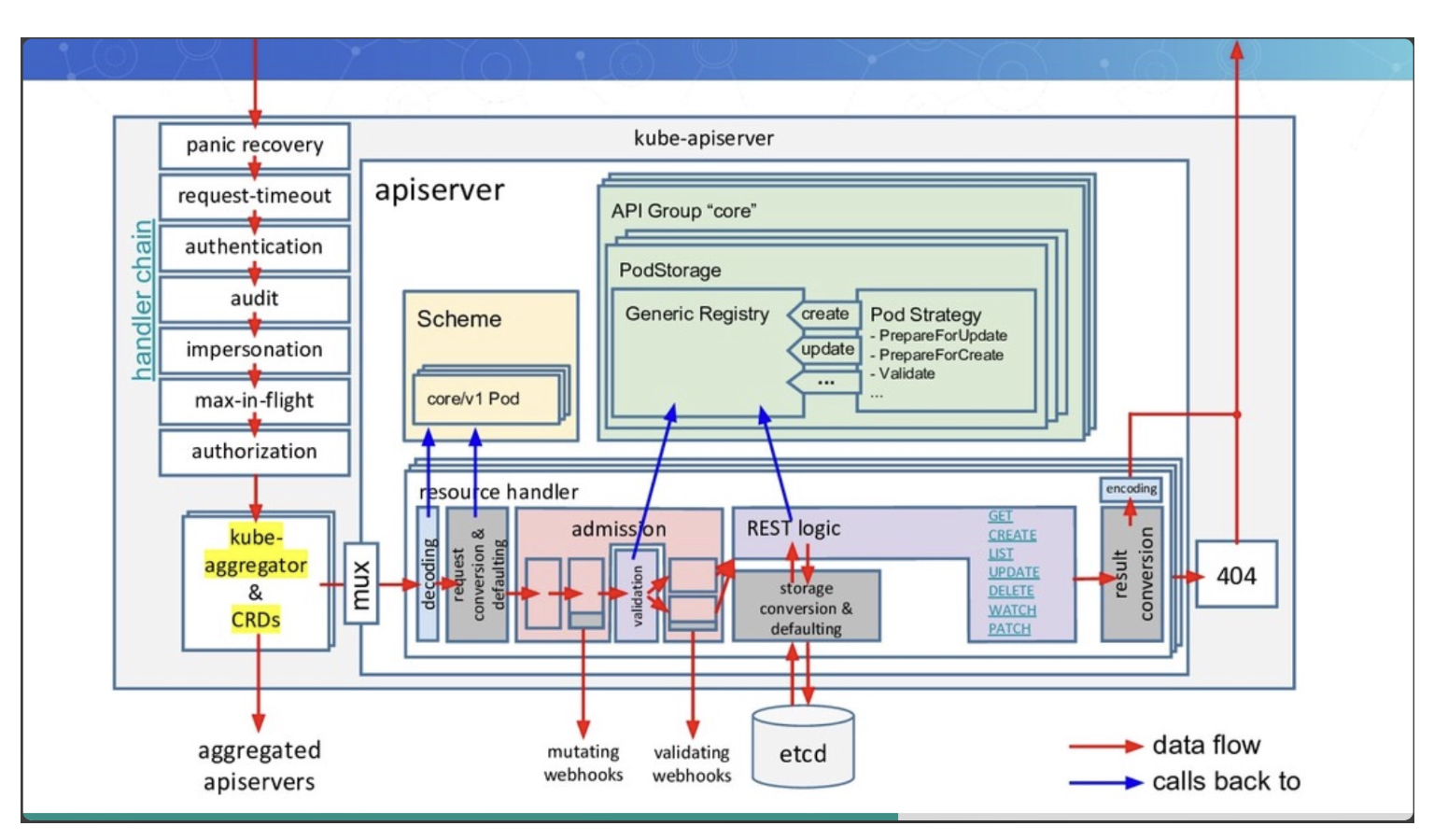

kubernetes

apiserver

container

contraller

- kubernetes源码分析之schedule

- kubernetes源码分析之replicaset

- kubernetes源码分析之deploy

- kubernetes源码分析之endpoint

- services原理

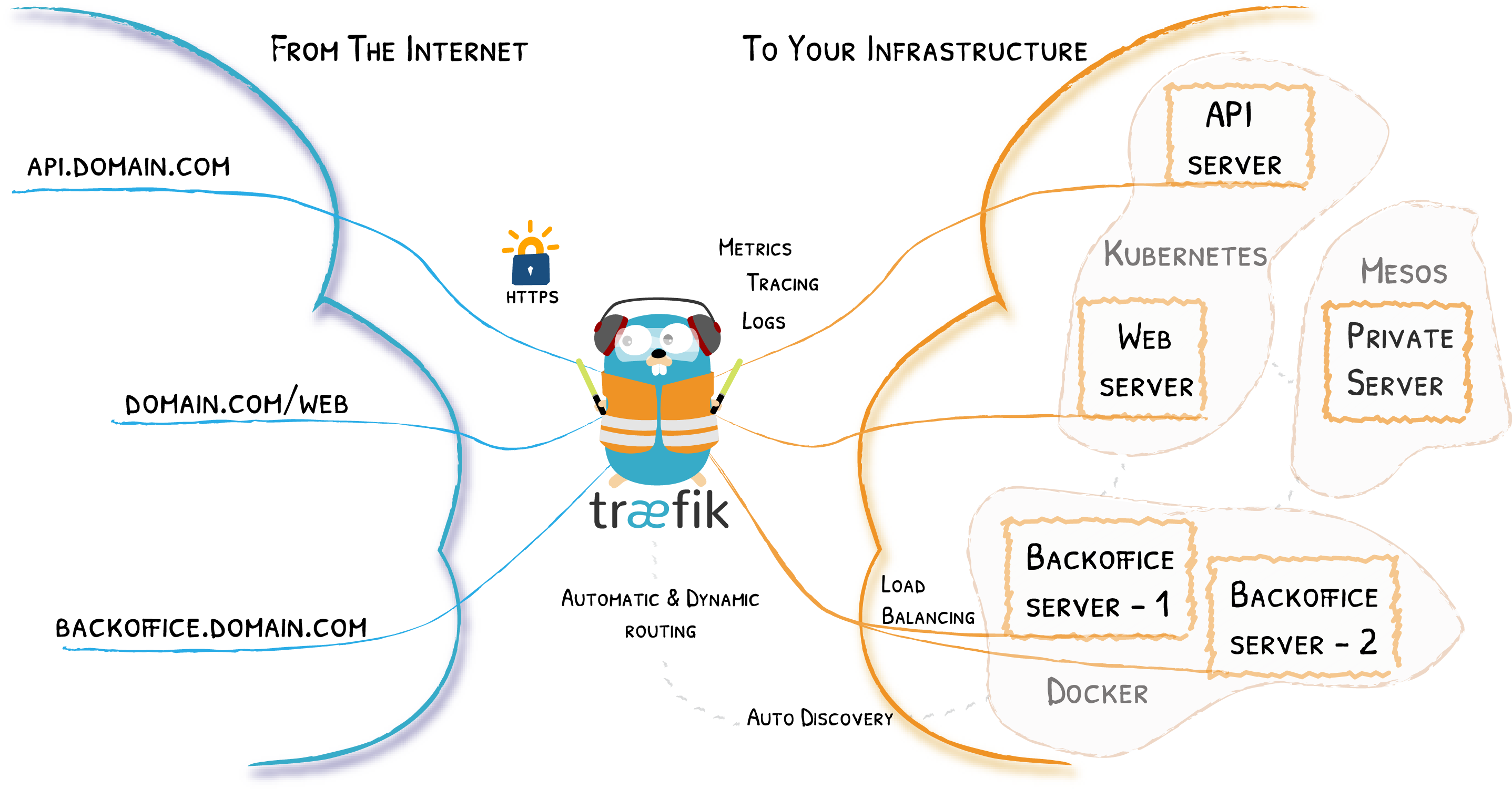

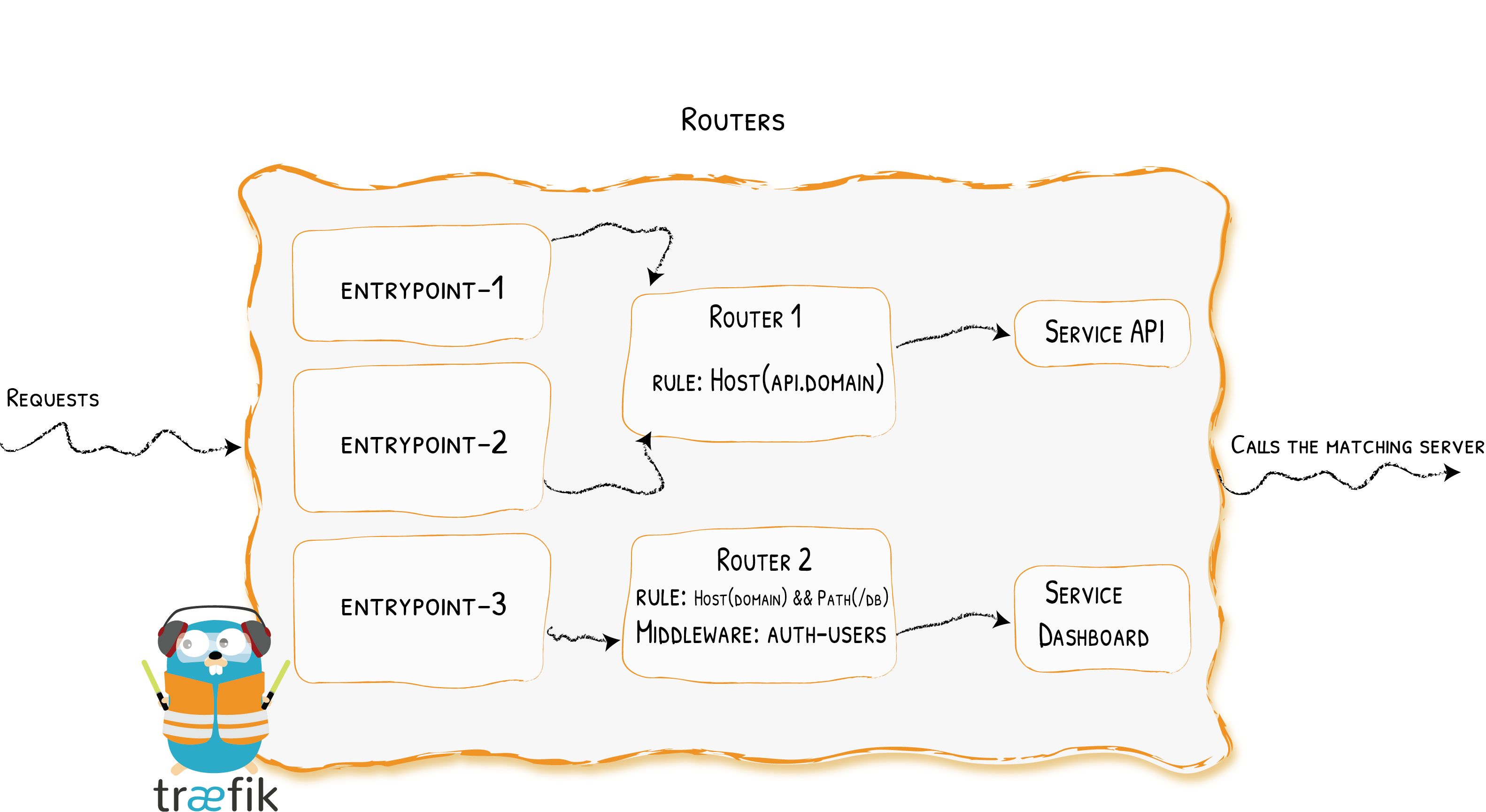

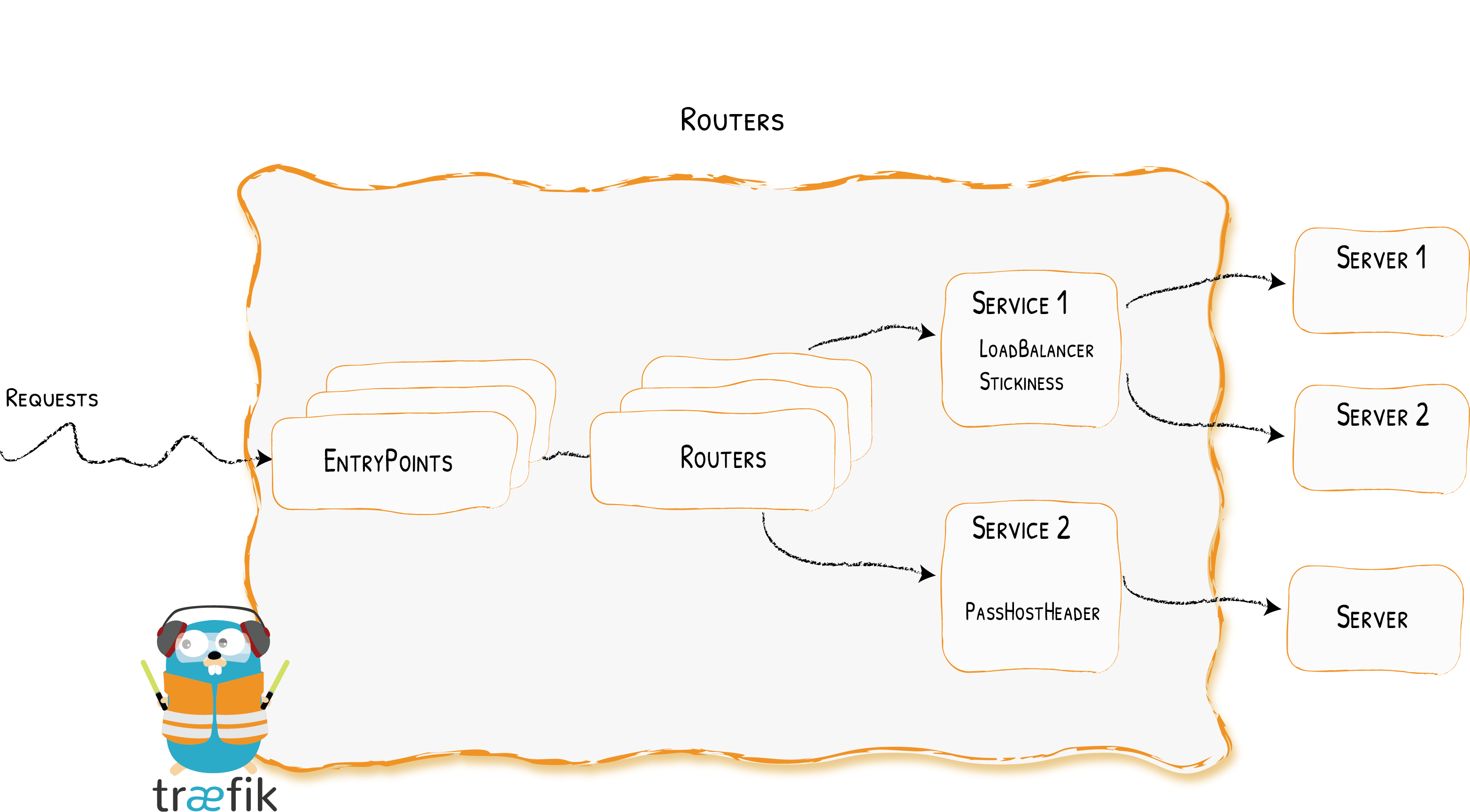

traefik

channel详解

chan须知

在golang中,chan用于多个go中的数据交互,分有buff和无buff,定义如下,无buf会阻塞发送端以及接收端,知道buf里数据被取走发送端才会解除阻塞

有buf则在buf满之前只阻塞接收端,下面我们取看看底层数据结构,以及各种情况go如何调度

make(chan int,1) //无buff

make(chan int,10) //有buff

我们先来找下chan在底层的定义,通过dlv找到创建chan的具体实现是runtime.makechan

//chan.go

package main

func main(){

_ = make(chan int,1)

}

#通过dlv找到创建chan go具体的代码

root@node1:~/work/go/demo# dlv debug chan.go

Type 'help' for list of commands.

(dlv) b main.main

Breakpoint 1 set at 0x460dc6 for main.main() ./chan.go:3

(dlv) c

> main.main() ./chan.go:3 (hits goroutine(1):1 total:1) (PC: 0x460dc6)

1: package main

2:

=> 3: func main(){

4: _ = make(chan int,1)

5: }

(dlv) disass

TEXT main.main(SB) /root/work/go/demo/chan.go

chan.go:3 0x460dc0 493b6610 cmp rsp, qword ptr [r14+0x10]

chan.go:3 0x460dc4 7629 jbe 0x460def

=> chan.go:3 0x460dc6* 4883ec18 sub rsp, 0x18

chan.go:3 0x460dca 48896c2410 mov qword ptr [rsp+0x10], rbp

chan.go:3 0x460dcf 488d6c2410 lea rbp, ptr [rsp+0x10]

chan.go:4 0x460dd4 488d0585440000 lea rax, ptr [rip+0x4485]

chan.go:4 0x460ddb bb01000000 mov ebx, 0x1

chan.go:4 0x460de0 e87b38faff call $runtime.makechan

chan.go:5 0x460de5 488b6c2410 mov rbp, qword ptr [rsp+0x10]

chan.go:5 0x460dea 4883c418 add rsp, 0x18

chan.go:5 0x460dee c3 ret

chan.go:3 0x460def e84cccffff call $runtime.morestack_noctxt

chan.go:3 0x460df4 ebca jmp $main.main

#通过不断输入si跳转到runtime.makechan后 找到文件位置如下

##> runtime.makechan() /usr/local/go/src/runtime/chan.go:72 (PC: 0x404660)

下面我们一起来看看具体的数据结构

channel 数据结构

//可以简单理解成环形buf, 具体结构看下图

type hchan struct {

//整个chan <-放了多少数据

qcount uint // total data in the queue

//总共可以放多少数据

dataqsiz uint // size of the circular queue

//存放数据的数组

buf unsafe.Pointer // points to an array of dataqsiz elements

//能够收发元素的大小

elemsize uint16

closed uint32

//make 里chan的类型

elemtype *_type // element type

//发送处理到的index

sendx uint // send index

//接收buf的index

recvx uint // receive index

//被阻塞的接收g列表,双向链表

recvq waitq // list of recv waiters

//被阻塞的发送g列表

sendq waitq // list of send waiters

// lock protects all fields in hchan, as well as several

// fields in sudogs blocked on this channel.

//

// Do not change another G's status while holding this lock

// (in particular, do not ready a G), as this can deadlock

// with stack shrinking.

lock mutex

}

这段数据结构还挺简单的,主要就是一个环形数组,我们继续看make初始化方法

//篇幅有限,删除掉了一堆校验

func makechan(t *chantype, size int) *hchan {

elem := t.elem

mem, overflow := math.MulUintptr(elem.size, uintptr(size))

var c *hchan

switch {

//mem为0,对应我们make(chan type,0)这种情况,仅分配一段内存

case mem == 0:

// Queue or element size is zero.

c = (*hchan)(mallocgc(hchanSize, nil, true))

// Race detector uses this location for synchronization.

c.buf = c.raceaddr()

//存储的类型不是指针类型,分配一段内存空间

//补充 elem.ptrdata对应是该结构指针截止的长度位置

case elem.ptrdata == 0:

// Elements do not contain pointers.

// Allocate hchan and buf in one call.

c = (*hchan)(mallocgc(hchanSize+mem, nil, true))

c.buf = add(unsafe.Pointer(c), hchanSize)

//默认分配内存

default:

// Elements contain pointers.

c = new(hchan)

c.buf = mallocgc(mem, elem, true)

}

c.elemsize = uint16(elem.size)

c.elemtype = elem

c.dataqsiz = uint(size)

lockInit(&c.lock, lockRankHchan)

return c

}

初始分配就是分配好hchan的结构体,接下来我们看发送和接收数据。

channel 发送数据

在上文中的代码我们改成如下结果,根据dlv分析得到执行函数未runtime.chansend1,下面我们看看具体的代码,由于代码比较复杂,我们先看流程图,后根据每种情况

看具体代码

//chan.go

package main

func main(){

chan1 := make(chan int,10)

chan1 <- 1

chan1 <- 2

}

#寻找发送数据代码位置

> main.main() ./chan.go:5 (PC: 0x458591)

Warning: debugging optimized function

chan.go:4 0x458574 488d05654b0000 lea rax, ptr [rip+0x4b65]

chan.go:4 0x45857b bb0a000000 mov ebx, 0xa

chan.go:4 0x458580 e8fbb6faff call $runtime.makechan

chan.go:4 0x458585 4889442410 mov qword ptr [rsp+0x10], rax

chan.go:5 0x45858a 488d1d2f410200 lea rbx, ptr [rip+0x2412f]

=> chan.go:5 0x458591 e8cab8faff call $runtime.chansend1

chan.go:6 0x458596 488b442410 mov rax, qword ptr [rsp+0x10]

chan.go:6 0x45859b 488d1d26410200 lea rbx, ptr [rip+0x24126]

chan.go:6 0x4585a2 e8b9b8faff call $runtime.chansend1

chan.go:7 0x4585a7 488b6c2418 mov rbp, qword ptr [rsp+0x18]

chan.go:7 0x4585ac 4883c420 add rsp, 0x20

# runtime.chansend1() /usr/local/go/src/runtime/chan.go:144 (PC: 0x403e60)

# chan 发送数据流程图

+-----------+

| put data |

+-----------+

^

| buf没满

|

+--------------+ chan not nil +-------------------+ +------------+ not sudog +-----------+ buf满了 +-----------------------+ +---------------------+

| channel | --------------> | non blocking | --> | lock | -----------> | chan | -------> | pack sudog && enqueue | --> | go park && schedule |

+--------------+ +-------------------+ +------------+ +-----------+ +-----------------------+ +---------------------+

| | |

| chan is nil | is close && full | get sudog

v v v

+--------------+ +-------------------+ +------------+ +-----------+ +-----------------------+

| gopark | | return false | | send | -----------> | copy data | -------> | goready |

+--------------+ +-------------------+ +------------+ +-----------+ +-----------------------+

由上图得知,我们chan <- i会遇到几种情况

- 如果recvq上存在被阻塞的g,则会直接讲数据发给当前g,设置成下一个运行的g

- 如果chan buf没满,直接将数据存到buf中

- 如果都不满足,创建一个sudog,并将其加入chan的sendq队列,当前g陷入阻塞等待chan的数据被接收 下面我们再结合代码看下具体的实现

首先判断chan为空,永久阻塞,

func chansend(c *hchan, ep unsafe.Pointer, block bool, callerpc uintptr) bool {

//c为空且为阻塞,park住等待接收端接收

if c == nil {

if !block {

return false

}

gopark(nil, nil, waitReasonChanSendNilChan, traceEvGoStop, 2)

throw("unreachable")

}

然后判断非阻塞且未被关闭且buf满了,返回false,然后加锁

if !block && c.closed == 0 && full(c) {

return false

}

lock(&c.lock)

然后判断hchan.waitq上是否有等待的g,也就是代码里<-chan的g,如果拿到了g,则执行send

if sg := c.recvq.dequeue(); sg != nil {

// Found a waiting receiver. We pass the value we want to send

// directly to the receiver, bypassing the channel buffer (if any).

send(c, sg, ep, func() { unlock(&c.lock) }, 3)

return true

}

dequeue()是拿g的逻辑,waitq是一个sudog的双向链表,存了first,last的sudog,sudog我们在runtime系列里会有一节详解,

func (q *waitq) dequeue() *sudog {

for {

sgp := q.first

if sgp == nil {

return nil

}

y := sgp.next

if y == nil {

q.first = nil

q.last = nil

} else {

y.prev = nil

q.first = y

sgp.next = nil // mark as removed (see dequeueSudoG)

}

if sgp.isSelect && !sgp.g.selectDone.CompareAndSwap(0, 1) {

continue

}

return sgp

}

}

下面我们再来看看send的逻辑,sendDirect将chan里的数据拷贝给g,同时通过goready唤醒对应取数据的g

func send(c *hchan, sg *sudog, ep unsafe.Pointer, unlockf func(), skip int) {

if sg.elem != nil {

sendDirect(c.elemtype, sg, ep)

sg.elem = nil

}

gp := sg.g

unlockf()

gp.param = unsafe.Pointer(sg)

sg.success = true

if sg.releasetime != 0 {

sg.releasetime = cputicks()

}

goready(gp, skip+1)

}

当waitq上没有g在等待的时候,如果buf没满,将数据放到buf上继续

if c.qcount < c.dataqsiz {

// Space is available in the channel buffer. Enqueue the element to send.

qp := chanbuf(c, c.sendx)

if raceenabled {

racenotify(c, c.sendx, nil)

}

typedmemmove(c.elemtype, qp, ep)

c.sendx++

if c.sendx == c.dataqsiz {

c.sendx = 0

}

c.qcount++

unlock(&c.lock)

return true

}

当buf满了,阻塞当前这个发送的g,并通过acquireSudog获取一个sudog绑定上当前的g,放入sendq的g队列中,同时gopack,等待调度重新唤醒,直到接收了数据后,

将sudog通过releaseSudog释放回池

// Block on the channel. Some receiver will complete our operation for us.

gp := getg()

//获取sudog

mysg := acquireSudog()

mysg.releasetime = 0

if t0 != 0 {

mysg.releasetime = -1

}

// No stack splits between assigning elem and enqueuing mysg

// on gp.waiting where copystack can find it.

mysg.elem = ep

mysg.waitlink = nil

mysg.g = gp

mysg.isSelect = false

mysg.c = c

gp.waiting = mysg

gp.param = nil

c.sendq.enqueue(mysg)

// Signal to anyone trying to shrink our stack that we're about

// to park on a channel. The window between when this G's status

// changes and when we set gp.activeStackChans is not safe for

// stack shrinking.

gp.parkingOnChan.Store(true)

gopark(chanparkcommit, unsafe.Pointer(&c.lock), waitReasonChanSend, traceEvGoBlockSend, 2)

// Ensure the value being sent is kept alive until the

// receiver copies it out. The sudog has a pointer to the

// stack object, but sudogs aren't considered as roots of the

// stack tracer.

KeepAlive(ep)

// someone woke us up.

if mysg != gp.waiting {

throw("G waiting list is corrupted")

}

gp.waiting = nil

gp.activeStackChans = false

closed := !mysg.success

gp.param = nil

if mysg.releasetime > 0 {

blockevent(mysg.releasetime-t0, 2)

}

mysg.c = nil

releaseSudog(mysg)

if closed {

if c.closed == 0 {

throw("chansend: spurious wakeup")

}

panic(plainError("send on closed channel"))

}

return true

chan接收数据

我们还是通过dlv找到接收数据具体调用为 CALL runtime.chanrecv1(SB),下面我们来看看具体流程,流程图如下

+------------------+

| recv |

+------------------+

block ^

+-----------------------------------+ | sendq.dequeue()

| v |

+----------------+ chan not nil +--------+ not block && not recv +------+ +--------------------+ +------------------+ buf为空 +-----------------------+ +-------------------+

| channelrecv | --------------> | block? | -----------------------> | lock | --> | chan close? | --> | not close | -------> | pack sudog && enqueue | --> | go park && schedule |

+----------------+ +--------+ +------+ +--------------------+ +------------------+ +-----------------------+ +---------------------+

| | |

| chan is nil | close && qcount=0 | buf不为空

v v v

+----------------+ +--------------------+ +------------------+

| gopark forever | | return | | copy value |

+----------------+ +--------------------+ +------------------+

下面让我们来看看具体代码,与发送重复的代码就省略了

先加锁,如果chan已经被关闭了且没有多余的数据了,直接返回,未关闭则先从sendq上取一个sudog,上文我们提到满了的时候则会有g通过sudog被gopark在sendq上

所以优先查看是否有被gopark的发送sudog,在recv方法里去ready,recv主要是唤醒发送的发送sudog,跟上文的send方法类似

lock(&c.lock)

if c.closed != 0 {

if c.qcount == 0 {

if raceenabled {

raceacquire(c.raceaddr())

}

unlock(&c.lock)

if ep != nil {

typedmemclr(c.elemtype, ep)

}

return true, false

}

} else {

if sg := c.sendq.dequeue(); sg != nil {

recv(c, sg, ep, func() { unlock(&c.lock) }, 3)

return true, true

}

}

当没没有send的sudog时候,判断buf是不是为空,不为空则取出数据,buf为空的情况下如果不需要阻塞,直接解锁返回

if c.qcount > 0 {

// Receive directly from queue

qp := chanbuf(c, c.recvx)

if raceenabled {

racenotify(c, c.recvx, nil)

}

if ep != nil {

typedmemmove(c.elemtype, ep, qp)

}

typedmemclr(c.elemtype, qp)

c.recvx++

if c.recvx == c.dataqsiz {

c.recvx = 0

}

c.qcount--

unlock(&c.lock)

return true, true

}

if !block {

unlock(&c.lock)

return false, false

}

后面跟我们的send逻辑类似.获取一个sudog,添加进hchan.recvq里,等待chansend里逻辑唤醒,唤醒后通过releaseSudog放回sudog

// no sender available: block on this channel.

gp := getg()

mysg := acquireSudog()

mysg.releasetime = 0

if t0 != 0 {

mysg.releasetime = -1

}

// No stack splits between assigning elem and enqueuing mysg

// on gp.waiting where copystack can find it.

mysg.elem = ep

mysg.waitlink = nil

gp.waiting = mysg

mysg.g = gp

mysg.isSelect = false

mysg.c = c

gp.param = nil

c.recvq.enqueue(mysg)

// Signal to anyone trying to shrink our stack that we're about

// to park on a channel. The window between when this G's status

// changes and when we set gp.activeStackChans is not safe for

// stack shrinking.

gp.parkingOnChan.Store(true)

gopark(chanparkcommit, unsafe.Pointer(&c.lock), waitReasonChanReceive, traceEvGoBlockRecv, 2)

// someone woke us up

if mysg != gp.waiting {

throw("G waiting list is corrupted")

}

gp.waiting = nil

gp.activeStackChans = false

if mysg.releasetime > 0 {

blockevent(mysg.releasetime-t0, 2)

}

success := mysg.success

gp.param = nil

mysg.c = nil

releaseSudog(mysg)

return true, success

time

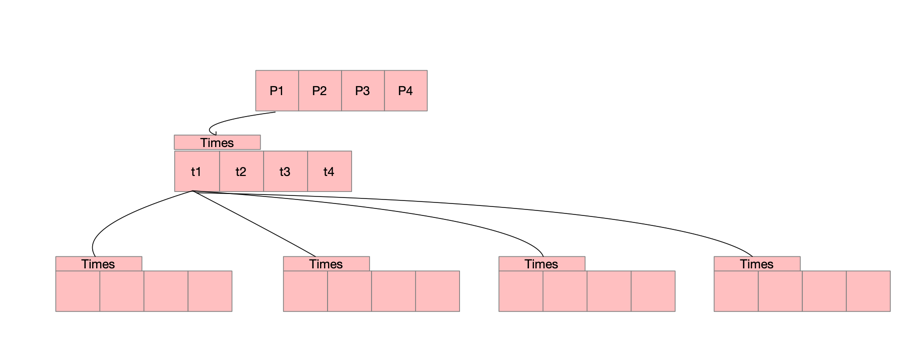

golang在1.14之前time改动了很多个版本,从全局到分片到网络轮询器,我们以最新的1.20.4讲解,有兴趣的同学可以看看对应的issues 我们还是先看图(//todo ASSIC不好画,后面有空在画,不影响阅读),再看具体代码

- 全局四叉堆:锁争用严重

- 分片四叉堆: 固定分割成64个hash减少锁力度,处理器和线程之间切换影响性能

- 网络轮询器版本: 所有的计时器以最小四叉堆存储在runtime.p 下面我们来看下网络轮询起中的版本

time 结构

time在1.14版本后,每个p上都挂了一个time的四叉小顶堆,结构如下,根据time上的when字段排序,具体调度触发time由schedule逻辑以及sysmon线程

结合netpoll去执行,可以看看runtime系列里schdule逻辑,以及netpoll文章

p里与time相关的字段如下

type p struct {

//堆顶最早一个执行时间

timer0When atomic.Int64

//timer修改最早时间

timerModifiedEarliest atomic.Int64

//操作timers数组的互斥锁

timersLock mutex

//四叉堆

timers []*timer

//四叉堆里timer的总数

numTimers atomic.Uint32

//标记删除的数量

deletedTimers atomic.Uint32

}

下面先简单了解下timer的具体结构,也就是四叉堆里的元素,方便后面具体调用过程

type timer struct {

//在堆上的time会保存对应p

pp puintptr

//计时器被实际唤醒时间

when int64

//周期性的定时任务两次被唤醒的间隔

period int64

//被唤醒的回调函数

f func(any, uintptr)

//回调函数传参

arg any

//回调函数的参数,在netpoll使用

seq uintptr

//处于特定状态,设置when字段

nextwhen int64

//状态

status atomic.Uint32

}

简单点来说,就是timer启动的时候,插入当前p上的四叉堆,同时p记录要执行的时间,//todo 我们在看看新建一个timer具体的流程与代码,使用dlv找到我们具体的代码为addtimer

添加定时器

// src/runtime/time.go

func addtimer(t *timer) {

t.status.Store(timerWaiting)

when := t.when

//获取当前g所在的m,会加锁处理,以及对应的p

mp := acquirem()

pp := getg().m.p.ptr()

//加锁

lock(&pp.timersLock)

//清理掉timer堆中删除的(也就是调用time.stop),以及过期的timer

cleantimers(pp)

//添加timer到堆上,主要调用堆排序算法插入,感兴趣可以自己去源码里看看

//同时将堆顶元素存到p的timer0When字段

doaddtimer(pp, t)

unlock(&pp.timersLock)

//when的值小于pollUntil时间,唤醒正在netpoll休眠的线程

wakeNetPoller(when)

releasem(mp)

}

我们根据上面代码了解了添加定时器,删除,修改调整定时器这里不在啰嗦了,就是操作四叉堆以及timer的状态机,我们重点描述下调度的触发逻辑

触发定时器

checkTimers是调度器触发timer运行的函数,主要在以下函数中触发,我们来看看具体触发逻辑

- schedule()

- findrunnable() 获取可执行的g

- stealWork()

- sysmon()

以上3个地方调用的都是checkTimers函数,该函数调整堆,删除一些比如过期的timer,调用time.stop的timer,以及返回下一个timer需要执行的时间,如果有

需要执行的则直接执行,下面看看具体代码

// src/runtime/time.go

func checkTimers(pp *p, now int64) (rnow, pollUntil int64, ran bool) {

//下一个timer的调度时间

next := pp.timer0When.Load()

//某个timer被修改,且修改的触发时间早于next

nextAdj := pp.timerModifiedEarliest.Load()

if next == 0 || (nextAdj != 0 && nextAdj < next) {

next = nextAdj

}

//没有需要调度的

if next == 0 {

return now, 0, false

}

if now == 0 {

now = nanotime()

}

//当前m不是该p绑定的,也就是说通过sysmon调度的,或者需要删除的timer小于1/4堆内元素

if now < next {

if pp != getg().m.p.ptr() || int(pp.deletedTimers.Load()) <= int(pp.numTimers.Load()/4) {

return now, next, false

}

}

lock(&pp.timersLock)

//四叉堆不为空

if len(pp.timers) > 0 {

//更新pp.timerModifiedEarliest数量

adjusttimers(pp, now)

for len(pp.timers) > 0 {

//运行计时器,主要是调整四叉堆,以及操作状态及,以及最早的timer需要运行的时间

if tw := runtimer(pp, now); tw != 0 {

if tw > 0 {

pollUntil = tw

}

break

}

ran = true

}

}

//四叉堆的维护以及删除过期定时器

if pp == getg().m.p.ptr() && int(pp.deletedTimers.Load()) > len(pp.timers)/4 {

clearDeletedTimers(pp)

}

unlock(&pp.timersLock)

//返回现在时间,以及下一个timer的运行时间,ran为是否有下一个需要运行

return now, pollUntil, ran

}

sysmon调度

调度的地方有很多,只是举例在sysmon里的调度,就是通过timeSleepUntil()函数下一个需要调度的时间

// src/runtime/proc.go

func sysmon() {

//获取pp.timer0When或者timerModifiedEarliest

next := timeSleepUntil()

if next := timeSleepUntil(); next < now {

startm(nil, false)

}

golang select详解

核心数据结构如下,除了default,其它的select{case:}都与chan有关,scase.c则存储的是一个channel,在1.channel详解我们详细了解过chan。下面我们来

看看select的几种情况

// go/src/runtime/select.go

type scase struct {

c *hchan // chan

elem unsafe.Pointer // data element

}

sync once

sync.Once只有一个方法,sync.Do(),通常我们用来调用在多个地方执行保证只执行一次的方法,如初始化,关闭fd等 具体代码如下,通过atomic获取done,为0则抢锁执行do传进来的方法,执行完后将done atomic变成1

type Once struct {

done uint32

m Mutex

}

func (o *Once) Do(f func()) {

if atomic.LoadUint32(&o.done) == 0 {

o.doSlow(f)

}

}

func (o *Once) doSlow(f func()) {

o.m.Lock()

defer o.m.Unlock()

if o.done == 0 {

defer atomic.StoreUint32(&o.done, 1)

f()

}

}

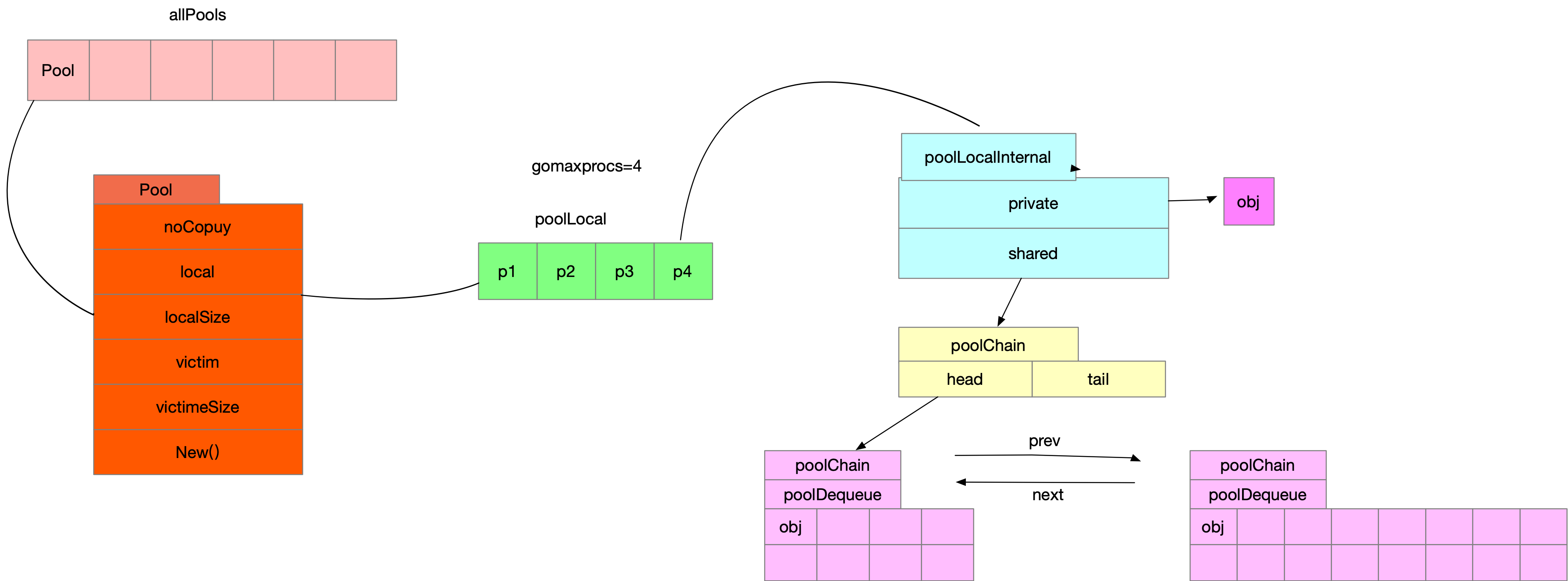

sync.pool

主要为了减少gc,减少gc在mark的时候需要标记的inuse_objects数量过多。通俗点来说就是一个内存池,我们自己实现g et,put。

需要注意的是,仅存在与pool中,在外没有被其它地方引用,可能会被pool删除,下面我们来看看调用sync.Pool具体流程

根据流程图我们先一起梳理下,所有的pool都被存储在allPools这个数组中,注意不要不停的生成新的sync.Pool,allPools是加锁去append

sync.pool init

pool的初始化调用的是pool.poolCleanup的方法,主要逻辑如下,将old里每个p清空,将allpools里p的local,localSize移动到victim,victimSize

然后将allpool移动给oldPools,方便后续清空

func poolCleanup() {

for _, p := range oldPools {

p.victim = nil

p.victimSize = 0

}

for _, p := range allPools {

p.victim = p.local

p.victimSize = p.localSize

p.local = nil

p.localSize = 0

}

oldPools, allPools = allPools, nil

}

pool.get

现在我们来看看get的逻辑

- 通过调用pool.Get->pool.pin->pool.pinSlow获取一个poolLocal,具体代码如下

func (p *Pool) Get() any {

l, pid := p.pin()

}

func (p *Pool) pin() (*poolLocal, int) {

//获取pid,使g绑定p并禁止抢占(本地队列)

pid := runtime_procPin()

s := runtime_LoadAcquintptr(&p.localSize) // load-acquire

l := p.local // load-consume

//如果pid比我本地Pool.localSize小则直接返回

if uintptr(pid) < s {

return indexLocal(l, pid), pid

}

//生成新的poolLocal

return p.pinSlow()

}

func (p *Pool) pinSlow() (*poolLocal, int) {

//解除独占

runtime_procUnpin()

//加锁

allPoolsMu.Lock()

defer allPoolsMu.Unlock()

pid := runtime_procPin()

// poolCleanup won't be called while we are pinned.

s := p.localSize

l := p.local

if uintptr(pid) < s {

return indexLocal(l, pid), pid

}

//将poolLocal追加到allPools数组里

if p.local == nil {

allPools = append(allPools, p)

}

// If GOMAXPROCS changes between GCs, we re-allocate the array and lose the old one.

size := runtime.GOMAXPROCS(0)

//local根据p的个数设置数组长度

local := make([]poolLocal, size)

atomic.StorePointer(&p.local, unsafe.Pointer(&local[0])) // store-release

runtime_StoreReluintptr(&p.localSize, uintptr(size)) // store-release

return &local[pid], pid

}

然后我们接着看后续逻辑

func (p *Pool) Get() any {

l, pid := p.pin()

//最优先元素,类似于p.runnext,或者cpu l1 缓存

x := l.private

l.private = nil

//如果没取到private

if x == nil {

// Try to pop the head of the local shard. We prefer

// the head over the tail for temporal locality of

// reuse.

//去shared取,pool.local[pid].poolLocal.shared

x, _ = l.shared.popHead()

//shared没拿到,去别的pid里对应的poolLocal.shared偷一个

if x == nil {

x = p.getSlow(pid)

}

}

}

我们来看看从别的poolLocal获取内存具体的操作

func (p *Pool) getSlow(pid int) any {

size := runtime_LoadAcquintptr(&p.localSize) // load-acquire

locals := p.local // load-consume

//从别的slow里偷

for i := 0; i < int(size); i++ {

l := indexLocal(locals, (pid+i+1)%int(size))

if x, _ := l.shared.popTail(); x != nil {

return x

}

}

//在victim里找

size = atomic.LoadUintptr(&p.victimSize)

if uintptr(pid) >= size {

return nil

}

locals = p.victim

l := indexLocal(locals, pid)

if x := l.private; x != nil {

l.private = nil

return x

}

//在victim里别的p上偷

for i := 0; i < int(size); i++ {

l := indexLocal(locals, (pid+i)%int(size))

if x, _ := l.shared.popTail(); x != nil {

return x

}

}

// Mark the victim cache as empty for future gets don't bother

// with it.

atomic.StoreUintptr(&p.victimSize, 0)

//都没有返回nil

return nil

}

若果没有从getSlow上获取,还会调用pool.New,也就是我们sync.Pool{New:func}的方法,都拿不到则返回为nil

if x == nil && p.New != nil {

x = p.New()

}

return x

总结下,get的具体逻辑为

- 是否有Pool,没有则生成Pool并添加到runtime.allPools上

- 从Pool.poolLocal[pid].poolLocalInternal.private上拿,拿不到去shared上拿,还拿不到去别的pid上的shared拿

- 再拿不到从别的即将要被清空的Pool.victim上拿,逻辑如上,还拿不到则执行sync.Pool{New:func}。还拿不到则返回nil

pool.put

下面我们再来看看Pool.put,先放到Pool.poolLocal[pid].private,如果有值了,调用Pool.poolLocal[pid].shared.pushHead

pushHead里如果没值,则生成poolChainElt,且val长度为8,如果满了,则扩容,每次创建新的poolChainElt并扩容val长度都会*2,最大10亿多,并将新生成的

poolChainElt通过双向链表挂到之前的poolChainElt后面

// Put adds x to the pool.

func (p *Pool) Put(x any) {

if x == nil {

return

}

//获取一个[pid]poolLocal

l, _ := p.pin()

//[pid]poolLocal.poolLocalInternal.Private为空则赋值

if l.private == nil {

l.private = x

} else {

//放[pid]poolLocal.poolLocalInternal.shared.head上

l.shared.pushHead(x)

}

runtime_procUnpin()

if race.Enabled {

race.Enable()

}

}

//l.shared.pushHead(x)

func (c *poolChain) pushHead(val any) {

d := c.head

//head为空则生成一个poolChainElt,且val为8长度的双向链表节点

if d == nil {

// Initialize the chain.

const initSize = 8 // Must be a power of 2

d = new(poolChainElt)

d.vals = make([]eface, initSize)

c.head = d

storePoolChainElt(&c.tail, d)

}

//放入节点的head

if d.pushHead(val) {

return

}

//没放入成功,满了 则生成下一个链表节点,并将节点容量并乘2

newSize := len(d.vals) * 2

if newSize >= dequeueLimit {

// Can't make it any bigger.

newSize = dequeueLimit

}

d2 := &poolChainElt{prev: d}

d2.vals = make([]eface, newSize)

c.head = d2

storePoolChainElt(&d.next, d2)

//扩容后重新放入

d2.pushHead(val)

}

sync gc部分

同上sync.pool.init 将Pool.local移动到Pool.victim ,将Pool.localSize移动到Pool.victimSize并将上一次的Pool.victim,Pool.victimSize清空

lock 锁实现基础

锁的定义如下,先看下state字段,sema后文会详细介绍

// src/sync/mutex.go

type Mutex struct {

//32位 前29位表示有多少人g等待互斥锁的释放,后三位分别为mutexLocked,mutexWoken,mutexStarving

state int32

//信号量 对应在src/runtime/sema.go上的semTabSize存储,挂着等待锁的g

sema uint32

}

- mutexLocked表示是否已经加锁 1为已加锁

- mutexWoken 表示从正常模式被从唤醒

- mutexStarving 进入饥饿模式

锁正常模式和饥饿模式

正常模式下,锁是先入先出,排队获取锁,新唤醒的g与新创建的g竞争时(这时候占有cpu),大概率拿不到锁,go里面解决办法是出现超过1ms没拿到锁的g,锁进入饥饿模式

饥饿模式下,互斥锁会直接交给等待队列最前面的g,新的g直接丢在队尾,且不能自旋(没有cpu片)

取消条件为队尾的g获取到了锁,或者当前g获取锁1ms内

加锁逻辑

加锁逻辑,如果状态位是0,则改成1然后加锁成功,如果已经有锁的情况下,进入m.lockSlow()逻辑

// src/sync/mutex.go

func (m *Mutex) Lock() {

// Fast path: grab unlocked mutex.

if atomic.CompareAndSwapInt32(&m.state, 0, mutexLocked) {

return

}

// Slow path (outlined so that the fast path can be inlined)

m.lockSlow()

}

如果当前不能加锁,则进入自旋等模式等待锁释放,大体流程如下

- 通过runtime_canSpin判断是否能进入自旋

- 运行在多 CPU 的机器上

- 当前g获取锁进入自旋少于4次

- 至少有个p runq为空

- 通过自旋等待互斥锁的释放

- 计算互斥锁的最新状态

- 更新互斥锁的状态并获取锁

func (m *Mutex) lockSlow() {

//省略自旋获取锁代码

//通过信号量保证只会有一个g获取到锁,也就是我们开头的Mutex.sema

runtime_SemacquireMutex(&m.sema, queueLifo, 1)

}

下面我们看看信号量的逻辑,了解完信号量后接着分析runtime_SemacquireMutex

信号量详解

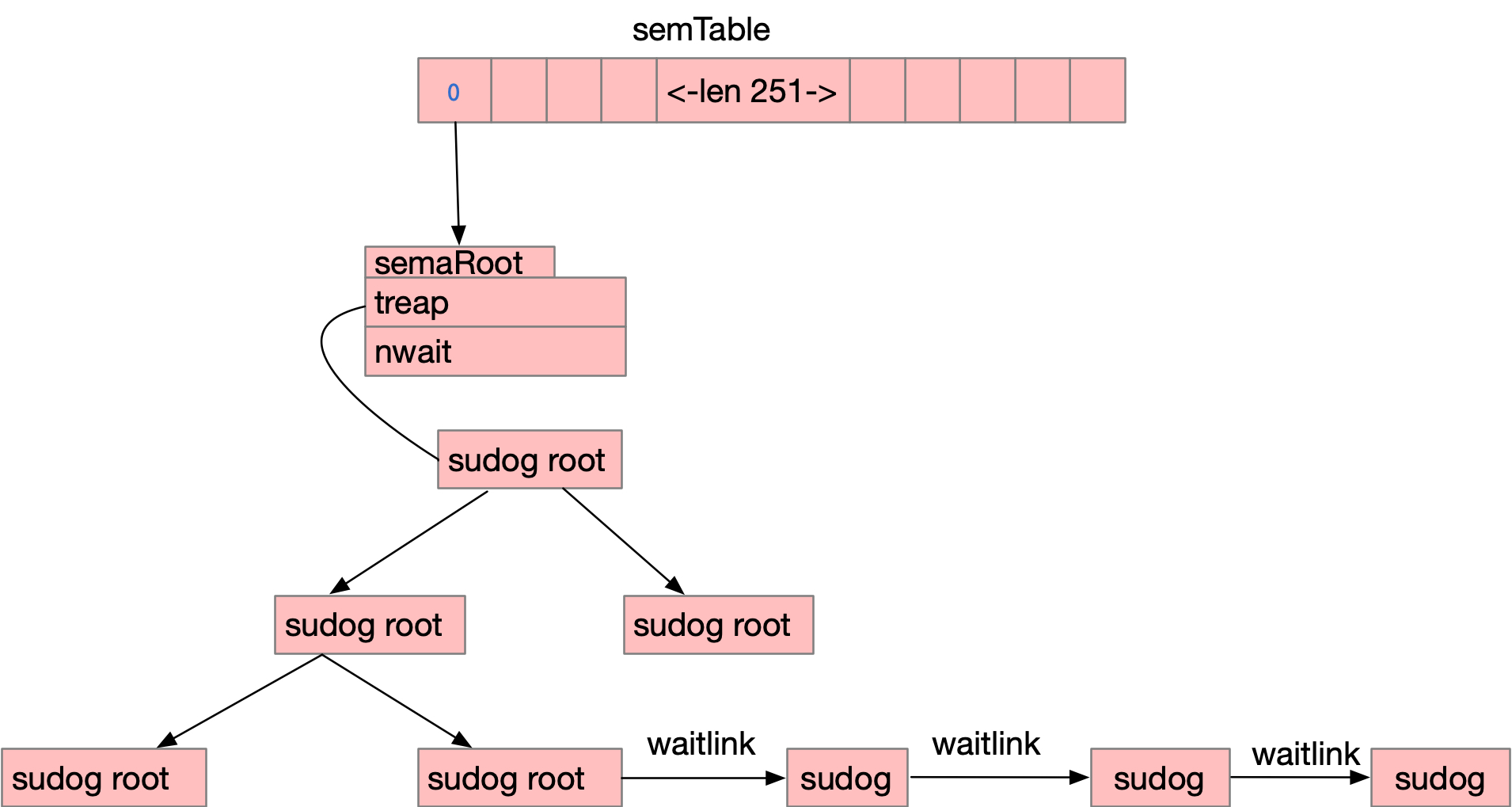

信号量的存储方式如下图,全局一个semtable,semtable是一个251长度的数据,每一个数组元素根据&m.sema hash后,用二叉树存储

比如lock A addr=1,lock b addr = 252,根据hash后,都在semtable[1]里,用树存储,而等待锁lock A的所有的g都通过sudog用

waitlink连接,具体结构图如下

下面我们接着看

下面我们接着看runtime_SemacquireMutex函数

//runtime_SemacquireMutex()->semacquire1()

func semacquire1(addr *uint32, lifo bool, profile semaProfileFlags, skipframes int, reason waitReason) {

//获取当前的g

gp := getg()

//获取一个sudog

s := acquireSudog()

//通过地址hash得到二叉树跟目录

root := semtable.rootFor(addr)

for {

lockWithRank(&root.lock, lockRankRoot)

//root上等待锁的sudog的个数

root.nwait.Add(1)

if cansemacquire(addr) {

root.nwait.Add(-1)

unlock(&root.lock)

break

}

//找到该addr在树上对应的节点

root.queue(addr, s, lifo)

//阻塞gopark,解锁的时候goready恢复

goparkunlock(&root.lock, reason, traceEvGoBlockSync, 4+skipframes)

if s.ticket != 0 || cansemacquire(addr) {

break

}

}

releaseSudog(s)

}

信号量解锁详解

具体代码与加锁的信号量处理差不多,简略代码如下,有兴趣的可以看看原来代码

// src/runtime/sema.go

func semrelease1(addr *uint32, handoff bool, skipframes int) {

readyWithTime(s, 5+skipframes)

}

func readyWithTime(s *sudog, traceskip int) {

if s.releasetime != 0 {

s.releasetime = cputicks()

}

goready(s.g, traceskip)

}

读写锁RWMutex

我们依次分析获取写锁和读锁的实现原理

type RWMutex struct {

w Mutex // 复用读写锁

writerSem uint32 // 写等待读信号量

readerSem uint32 // 读等待写信号量

readerCount atomic.Int32 // 执行的读操作数量

readerWait atomic.Int32 // 写操作被阻塞时等待的读操作个数

}

写锁

复用mutex锁,分几种情况

- 已有写锁,rw.w.Lock()将会阻塞,上文mutex逻辑,同时将readerCount设置最小值

- readerCount有值,说明有读锁,调用runtime_SemacquireRWMutex休眠,等待读锁释放writerSem信号量

func (rw *RWMutex) Lock() {

rw.w.Lock()

r := rw.readerCount.Add(-rwmutexMaxReaders) + rwmutexMaxReaders

if r != 0 && rw.readerWait.Add(r) != 0 {

runtime_SemacquireRWMutex(&rw.writerSem, false, 0)

}

}

读锁

readerCount为负数(-1<<30),则说明有写锁

直接调用runtime_SemacquireRWMutexR,如果有写锁,会阻塞readerSem

如果该方法的结果为非负数,则通过信号量readerSem阻塞,后续等待Unlock解写锁唤醒

func (rw *RWMutex) RLock() {

if rw.readerCount.Add(1) < 0 {

// A writer is pending, wait for it.

runtime_SemacquireRWMutexR(&rw.readerSem, false, 0)

}

}

waitgroup

waitgroup比较简单,当add的总数跟done的总数一样,通过信号量唤醒WaitGroup.wait()方法

有兴趣的可以了解下semtable后阅读下源码,挺简单的

type WaitGroup struct {

noCopy noCopy

//类似锁,需要注意高32位低32有大小端问题 add总数-wait总数

state atomic.Uint64 // high 32 bits are counter, low 32 bits are waiter count.

//存储在semtable上,上文有详细讲解

sema uint32

}

SingleFlight

//todo 时间关系未更完有空补充完

SingleFlight主要用于多个并发操作,只执行其中一个,例如多个get操作,防止缓存击穿

主要就是一个map加锁,多个操作通过map只执行一次,通过WaitGroup等待请求的返回

type Group struct {

mu sync.Mutex // protects m

m map[string]*call // lazily initialized

}

type call struct {

wg sync.WaitGroup

val any

err error

//累计多少个请求

dups int

chans []chan<- Result

}

map详解

本文基于golang 1.22版本

哈希表的实现方式

- 开放寻址法(感兴趣可自行查找了解)

- 双哈希法 开放寻址的改良,多次哈希减少冲突

- 拉链法 将一个bucket实现成一个链表,hash后落在同一个bucket里的key会插入链表来解决哈希冲突,golang是采用的链表解决冲突

下文我们将从map的数据结构,初始化,增删改查来分析源码是怎么实现的

如何查找map创建源码

golang代码如下,我们使用go tool compile -N -l -S main.go查看汇编代码,如下,小于8的通过runtime.makemap_small,大于8的runtime.makemap

还需注意,make(map[xx]xx,int64)这种形式会被特殊处理,最终也调用runtime.makemap,我们以runtime.makemap来分析

package main

var m1 = make(map[int]int, 0)

var m2 = make(map[int]int, 10)

...

(main.go:3) CALL runtime.makemap_small(SB)

(main.go:4) CALL runtime.makemap(SB)

...

函数的定义如下

//cmd/compile/internal/typecheck/_builtin/runtime.go

func makemap64(mapType *byte, hint int64, mapbuf *any) (hmap map[any]any)

func makemap(mapType *byte, hint int, mapbuf *any) (hmap map[any]any)

func makemap_small() (hmap map[any]any)

具体我们来看makemap这个函数

//runtime/map.go

func makemap(t *maptype, hint int, h *hmap) *hmap {

//@step1 判断是否溢出

mem, overflow := math.MulUintptr(uintptr(hint), t.Bucket.Size_)

if overflow || mem > maxAlloc {

hint = 0

}

//@step2 初始化hmap结构

// initialize Hmap

if h == nil {

h = new(hmap)

}

//随机哈希种子,防止碰撞共计

h.hash0 = uint32(rand())

// Find the size parameter B which will hold the requested # of elements.

// For hint < 0 overLoadFactor returns false since hint < bucketCnt.

B := uint8(0)

//@step3 设置桶的数量(2^B方个筒)

for overLoadFactor(hint, B) {

B++

}

h.B = B

//@step4 桶数量大于0 初始化桶内存

// allocate initial hash table

// if B == 0, the buckets field is allocated lazily later (in mapassign)

// If hint is large zeroing this memory could take a while.

if h.B != 0 {

var nextOverflow *bmap

h.buckets, nextOverflow = makeBucketArray(t, h.B, nil)

if nextOverflow != nil {

h.extra = new(mapextra)

h.extra.nextOverflow = nextOverflow

}

}

return h

}

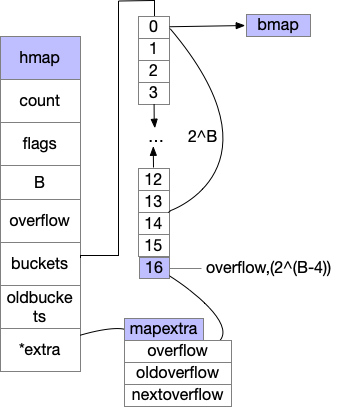

我们总结下,创建map的代码主要是返回了一个底层的hmap对象,具体的我们来看下hmap的结构体,主要的结构如下,bmap结构下文有图

//runtime/map.go

type hmap struct {

//总元素

count int // # live cells == size of map. Must be first (used by len() builtin)

//状态标位,如扩容中,正在被写入等

flags uint8

//桶的个数=2^B

B uint8 // log_2 of # of buckets (can hold up to loadFactor * 2^B items)

uint16 // approximate number of overflow buckets; see incrnoverflow for details

//哈希种子,防止哈希碰撞共计

hash0 uint32 // hash seed

//存储桶的数组

buckets unsafe.Pointer // array of 2^B Buckets. may be nil if count==0.

//发生扩容后 存的旧的bucket数组

oldbuckets unsafe.Pointer // previous bucket array of half the size, non-nil only when growing

nevacuate uintptr // progress counter for evacuation (buckets less than this have been evacuated)

//扩展字段

extra *mapextra // optional fields

}

我们来总结下map的初始化

- 判断长度调用makemap/makemap_small

- 创建hmap结构体,桶的个数为2^B

- 通过

makeBucketArray分配具体数据 - 分配扩展桶,具体个数我们来看

makeBucketArray的具体逻辑

makeBucketArray 的创建

- 当桶的个数小雨2^4时,由于数据较少,溢出桶没必要创建

- 多余2^4时,会创建2^(B-4)个溢出桶

- 溢出筒则添加到了buckets数组最后边,比如B=4,创建了16个桶+1个溢出筒,申请的数组长度为17

//runtime/map.go 省略了部分不相干代码

func makeBucketArray(t *maptype, b uint8, dirtyalloc unsafe.Pointer) (buckets unsafe.Pointer, nextOverflow *bmap) {

base := bucketShift(b)

nbuckets := base //桶的总个数

if b >= 4 {

nbuckets += bucketShift(b - 4) //添加了溢出筒

sz := t.Bucket.Size_ * nbuckets

up := roundupsize(sz, t.Bucket.PtrBytes == 0)

if up != sz {

nbuckets = up / t.Bucket.Size_

}

}

if dirtyalloc == nil {

buckets = newarray(t.Bucket, int(nbuckets))

}

//有溢出桶的情况下,由代码将buckers与overflow分割

if base != nbuckets {

nextOverflow = (*bmap)(add(buckets, base*uintptr(t.BucketSize)))

last := (*bmap)(add(buckets, (nbuckets-1)*uintptr(t.BucketSize)))

last.setoverflow(t, (*bmap)(buckets))

}

return buckets, nextOverflow

}

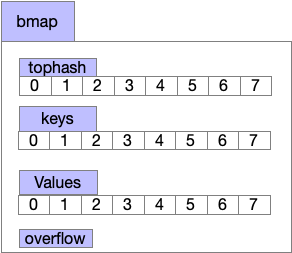

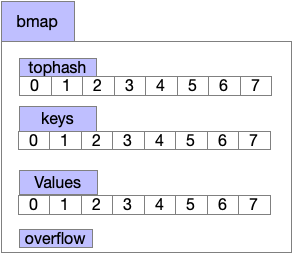

我们知道map的元素是放在bucket数组里,而具体存入的数据结构bmap如下图,我们放到map写入元素来讲解

map的读

map读写删除元素,逻辑整体差不多,都是找到对应的bucket桶然后去bmap里找,我们先看下读的相关逻辑,涉及到寻找key在map里对应的位置,

写仅在读的逻辑增加了map扩容,map对应的定义如下,根据key的不同,会调对应的mapaccess1(读),我们以map[any]any为例

//cmd/compile/internal/typecheck/_builtin/runtime.go

// *byte is really *runtime.Type

func makemap64(mapType *byte, hint int64, mapbuf *any) (hmap map[any]any)

func makemap(mapType *byte, hint int, mapbuf *any) (hmap map[any]any)

func makemap_small() (hmap map[any]any)

//读相关

func mapaccess1(mapType *byte, hmap map[any]any, key *any) (val *any)

func mapaccess1_fast32(mapType *byte, hmap map[any]any, key uint32) (val *any)

func mapaccess1_fast64(mapType *byte, hmap map[any]any, key uint64) (val *any)

func mapaccess1_faststr(mapType *byte, hmap map[any]any, key string) (val *any)

func mapaccess1_fat(mapType *byte, hmap map[any]any, key *any, zero *byte) (val *any)

func mapaccess2(mapType *byte, hmap map[any]any, key *any) (val *any, pres bool)

func mapaccess2_fast32(mapType *byte, hmap map[any]any, key uint32) (val *any, pres bool)

func mapaccess2_fast64(mapType *byte, hmap map[any]any, key uint64) (val *any, pres bool)

func mapaccess2_faststr(mapType *byte, hmap map[any]any, key string) (val *any, pres bool)

func mapaccess2_fat(mapType *byte, hmap map[any]any, key *any, zero *byte) (val *any, pres bool)

//写相关

func mapassign(mapType *byte, hmap map[any]any, key *any) (val *any)

func mapassign_fast32(mapType *byte, hmap map[any]any, key uint32) (val *any)

func mapassign_fast32ptr(mapType *byte, hmap map[any]any, key unsafe.Pointer) (val *any)

func mapassign_fast64(mapType *byte, hmap map[any]any, key uint64) (val *any)

func mapassign_fast64ptr(mapType *byte, hmap map[any]any, key unsafe.Pointer) (val *any)

func mapassign_faststr(mapType *byte, hmap map[any]any, key string) (val *any)

func mapiterinit(mapType *byte, hmap map[any]any, hiter *any)

func mapdelete(mapType *byte, hmap map[any]any, key *any)

func mapdelete_fast32(mapType *byte, hmap map[any]any, key uint32)

func mapdelete_fast64(mapType *byte, hmap map[any]any, key uint64)

func mapdelete_faststr(mapType *byte, hmap map[any]any, key string)

func mapiternext(hiter *any)

func mapclear(mapType *byte, hmap map[any]any)

//go 代码如下

var m1 = make(map[any]any, 10)

m1[10] = "20"

a := m1[10]

//对应的汇编如下

//CALL runtime.mapassign(SB) 写

//CALL runtime.mapaccess1(SB) 读

//runtime/map.go

//省略部分判断代码

func mapaccess1(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

//根据key,以及初始化map随机哈希种子hash0,生成哈希

hash := t.Hasher(key, uintptr(h.hash0))

//例如B=4,则m=1<<4-1

m := bucketMask(h.B)

//寻找hmap.buckes里对应的桶,hash&m,例如11&01=1,一半hash长度远超m的长度,而m取决于buckets的个数,无需关心hash具体的值

b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.BucketSize)))

//有旧的桶,扩容逻辑,下文详细讲解

if c := h.oldbuckets; c != nil {

if !h.sameSizeGrow() {

// There used to be half as many buckets; mask down one more power of two.

m >>= 1

}

oldb := (*bmap)(add(c, (hash&m)*uintptr(t.BucketSize)))

if !evacuated(oldb) {

b = oldb

}

}

//64位hash>>8*8-8,获取前8位 如(top)xxx....xxxxxx

top := tophash(hash)

bucketloop:

for ; b != nil; b = b.overflow(t) {

//bucketCnt 8个

for i := uintptr(0); i < bucketCnt; i++ {

//根据top选bmap里对应的key,value

if b.tophash[i] != top {

if b.tophash[i] == emptyRest {

break bucketloop

}

continue

}

//找到了key

k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.KeySize))

if t.IndirectKey() {

k = *((*unsafe.Pointer)(k))

}

//与我们要找的key相同,返回值

if t.Key.Equal(key, k) {

e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.KeySize)+i*uintptr(t.ValueSize))

if t.IndirectElem() {

e = *((*unsafe.Pointer)(e))

}

return e

}

}

}

return unsafe.Pointer(&zeroVal[0])

}

map的写

上文我们详细分析了map的读,写仅在该基础里添加替换以及扩容,我们仅分析扩容

- 具体过程为达到最大负载系数或溢出桶太多执行扩容,再通过growWork迁移旧bucket里的元素

//runtime/map.go

func mapassign(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {

again:

if h.growing() {

growWork(t, h, bucket)

}

//h.count+1>8 && h.count > bucket*6.5 || bucket count < noverflow < 2^15

if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) {

hashGrow(t, h)

goto again // Growing the table invalidates everything, so try again

}

}

执行hashGrow扩容,逻辑跟map初始化的逻辑蕾丝,将旧的buckets放入h.oldbuckets,重新初始化一个新的buckets数组

map元素的迁移在 growWork(t, h, bucket)中

//runtime/map.go

func hashGrow(t *maptype, h *hmap) {

// If we've hit the load factor, get bigger.

// Otherwise, there are too many overflow buckets,

// so keep the same number of buckets and "grow" laterally.

bigger := uint8(1)

if !overLoadFactor(h.count+1, h.B) {

bigger = 0

h.flags |= sameSizeGrow

}

oldbuckets := h.buckets

newbuckets, nextOverflow := makeBucketArray(t, h.B+bigger, nil)

flags := h.flags &^ (iterator | oldIterator)

if h.flags&iterator != 0 {

flags |= oldIterator

}

// commit the grow (atomic wrt gc)

h.B += bigger

h.flags = flags

h.oldbuckets = oldbuckets

h.buckets = newbuckets

h.nevacuate = 0

h.noverflow = 0

if h.extra != nil && h.extra.overflow != nil {

// Promote current overflow buckets to the old generation.

if h.extra.oldoverflow != nil {

throw("oldoverflow is not nil")

}

h.extra.oldoverflow = h.extra.overflow

h.extra.overflow = nil

}

if nextOverflow != nil {

if h.extra == nil {

h.extra = new(mapextra)

}

h.extra.nextOverflow = nextOverflow

}

// the actual copying of the hash table data is done incrementally

// by growWork() and evacuate().

}

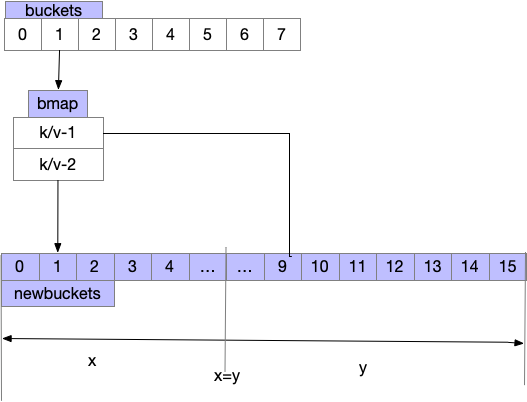

growWork为扩容最后一步,执行扩容后map里元素的整理,大体逻辑如下图

- 需注意每次赋值/删除 触发growWork仅拌匀当前命中的bucket,顺带多搬运一个bucket

- 将一个bucket里的数据分流到两个bucket里

- advanceEvacuationMark 哈希的计数器,扩容完成后清理oldbuckets

func growWork(t *maptype, h *hmap, bucket uintptr) {

evacuate(t, h, bucket&h.oldbucketmask())

if h.growing() {

evacuate(t, h, h.nevacuate)

}

}

func evacuate(t *maptype, h *hmap, oldbucket uintptr) {

//旧的buckets

b := (*bmap)(add(h.oldbuckets, oldbucket*uintptr(t.BucketSize)))

newbit := h.noldbuckets()

//x,y如上图将oldbuckets迁移到buckets过程,省略了部分代码

if !evacuated(b) {

var xy [2]evacDst

x := &xy[0]

x.b = (*bmap)(add(h.buckets, oldbucket*uintptr(t.BucketSize)))

x.k = add(unsafe.Pointer(x.b), dataOffset)

x.e = add(x.k, bucketCnt*uintptr(t.KeySize))

if !h.sameSizeGrow() {

// Only calculate y pointers if we're growing bigger.

// Otherwise GC can see bad pointers.

y := &xy[1]

y.b = (*bmap)(add(h.buckets, (oldbucket+newbit)*uintptr(t.BucketSize)))

y.k = add(unsafe.Pointer(y.b), dataOffset)

y.e = add(y.k, bucketCnt*uintptr(t.KeySize))

}

}

if oldbucket == h.nevacuate {

advanceEvacuationMark(h, t, newbit)

}

}

切片详解

本文基于go1.22.0版本 创建切片,以及扩容调用的是如下runtime里函数,定义如下,我们先过下初始化的过程,然后重点分析下扩容过程

//cmd/compile/internal/typecheck/_builtin/runtime.go

func makeslice(typ *byte, len int, cap int) unsafe.Pointer

func makeslice64(typ *byte, len int64, cap int64) unsafe.Pointer

func makeslicecopy(typ *byte, tolen int, fromlen int, from unsafe.Pointer) unsafe.Pointer

func growslice(oldPtr *any, newLen, oldCap, num int, et *byte) (ary []any)

func unsafeslicecheckptr(typ *byte, ptr unsafe.Pointer, len int64)

func panicunsafeslicelen()

func panicunsafeslicenilptr()

func unsafestringcheckptr(ptr unsafe.Pointer, len int64)

func panicunsafestringlen()

func panicunsafestringnilptr()

切片创建

本文举例为堆上内存分配 切片的创建比较简单,根据len计算出需要的cap,使用mallocgc分配内存,整体都比较简单就只贴代码简单分析了

//runtime/slice.go

type slice struct {

array unsafe.Pointer //数组地址

len int //实际长度

cap int //根据len向上取2^n

}

// A notInHeapSlice is a slice backed by runtime/internal/sys.NotInHeap memory.

type notInHeapSlice struct {

array *notInHeap

len int

cap int

}

//runtime/slice.go

func makeslice(et *_type, len, cap int) unsafe.Pointer {

//根据cap(大于等于len)计算是否溢出

mem, overflow := math.MulUintptr(et.Size_, uintptr(cap))

if overflow || mem > maxAlloc || len < 0 || len > cap {

mem, overflow := math.MulUintptr(et.Size_, uintptr(len))

if overflow || mem > maxAlloc || len < 0 {

panicmakeslicelen()

}

panicmakeslicecap()

}

//实际分配内存

return mallocgc(mem, et, true)

}

切片的扩容

//go:linkname reflect_growslice reflect.growslice

func reflect_growslice(et *_type, old slice, num int) slice {

num -= old.cap - old.len // preserve memory of old[old.len:old.cap]

new := growslice(old.array, old.cap+num, old.cap, num, et)

if et.PtrBytes == 0 {

oldcapmem := uintptr(old.cap) * et.Size_

newlenmem := uintptr(new.len) * et.Size_

memclrNoHeapPointers(add(new.array, oldcapmem), newlenmem-oldcapmem)

}

new.len = old.len // preserve the old length

return new

}

growslice为slice扩容的具体逻辑,下面我们一块来看下对应代码

- oldPtr原始的数组

- newLen = old.cap+num

//runtime/slice.go

func growslice(oldPtr unsafe.Pointer, newLen, oldCap, num int, et *_type) slice {

oldLen := newLen - num

if et.Size_ == 0 {

return slice{unsafe.Pointer(&zerobase), newLen, newLen}

}

//计算扩容切片的长度,小于256,双倍扩容,否则1.25倍

newcap := nextslicecap(newLen, oldCap)

var overflow bool

var lenmem, newlenmem, capmem uintptr

noscan := et.PtrBytes == 0

//分配具体内存大小,省略部分代码

switch {

case et.Size_ == 1:

case et.Size_ == goarch.PtrSize:

case isPowerOfTwo(et.Size_):

default:

lenmem = uintptr(oldLen) * et.Size_

newlenmem = uintptr(newLen) * et.Size_

capmem, overflow = math.MulUintptr(et.Size_, uintptr(newcap))

capmem = roundupsize(capmem, noscan)

newcap = int(capmem / et.Size_)

capmem = uintptr(newcap) * et.Size_

}

//分配内存

var p unsafe.Pointer

if et.PtrBytes == 0 {

p = mallocgc(capmem, nil, false)

memclrNoHeapPointers(add(p, newlenmem), capmem-newlenmem)

} else {

p = mallocgc(capmem, et, true)

if lenmem > 0 && writeBarrier.enabled {

bulkBarrierPreWriteSrcOnly(uintptr(p), uintptr(oldPtr), lenmem-et.Size_+et.PtrBytes, et)

}

}

//将旧的内存移动到新内存地址上

memmove(p, oldPtr, lenmem)

return slice{p, newLen, newcap}

}

前言

本系列基于golang 1.20.4版本

我们先查找下启动入口,编写一个最简单的demo查找下启动入口,执行

go build demo.go后,得到执行文件,然后我们使用readelf查找到启动的内存地址(0x456c40),使用dlv寻找到启动代码在rt0_linux_amd64.s里。

//demo.go

package main

func main(){

println("hello")

}

root@node1:~/work/go/demo# readelf -h demo

ELF 头:

Magic: 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00

类别: ELF64

数据: 2 补码,小端序 (little endian)

Version: 1 (current)

OS/ABI: UNIX - System V

ABI 版本: 0

类型: EXEC (可执行文件)

系统架构: Advanced Micro Devices X86-64

版本: 0x1

入口点地址: 0x456c40

程序头起点: 64 (bytes into file)

Start of section headers: 456 (bytes into file)

标志: 0x0

Size of this header: 64 (bytes)

Size of program headers: 56 (bytes)

Number of program headers: 7

Size of section headers: 64 (bytes)

Number of section headers: 23

Section header string table index: 3

root@node1:~/work/go/demo# dlv exec demo

Type 'help' for list of commands.

(dlv) b *0x456c40

Breakpoint 1 set at 0x456c40 for _rt0_amd64_linux() /usr/local/go/src/runtime/rt0_linux_amd64.s:8

以linux amd为例,启动文件如下如下

// ~go/src/runtime/rt0_linux_amd64.s

#include "textflag.h"

TEXT _rt0_amd64_linux(SB),NOSPLIT,$-8

JMP _rt0_amd64(SB)

TEXT _rt0_amd64_linux_lib(SB),NOSPLIT,$0

JMP _rt0_amd64_lib(SB)

调用了我们的_rt0_amd64,我们继续看_rt0_amd64做了哪些事

// ~go/src/runtime/asan_amd64.s

//为了方便读者,多余的都删除,感兴趣可以找源文件阅读

TEXT _rt0_amd64(SB),NOSPLIT,$-8

MOVQ 0(SP), DI // argc

LEAQ 8(SP), SI // argv

JMP runtime·rt0_go(SB)

TEXT runtime·rt0_go(SB),NOSPLIT|NOFRAME|TOPFRAME,$0

{......}

//argc,argv 作为操作系统的参数传递给args函数

MOVQ DI, AX // argc

MOVQ SI, BX // argv

// create istack out of the given (operating system) stack.

// _cgo_init may update stackguard.

MOVQ $runtime·g0(SB), DI //// 初始化 g0 执行栈

{......}

//注意着下面四个调用,我们后文基于这4个

//类型检查 在src/runtime/runtime1.go->check()方法,感兴趣可以自己查看

CALL runtime·check(SB)

CALL runtime·args(SB)

CALL runtime·osinit(SB)

CALL runtime·schedinit(SB)

// create a new goroutine to start program

MOVQ $runtime·mainPC(SB), AX // entry

// start this M

CALL runtime·newproc(SB)

CALL runtime·mstart(SB)

CALL runtime·abort(SB) // mstart should never return

上面_rt0_amd64就是我们启动的主流程汇编调用,下面我们来分析下每个调用具体干了啥

1.1 runtime.args(SB)

设置argv,auxv

// src/runtime/runtime1.go

func args(c int32, v **byte) {

argc = c

argv = v

sysargs(c, v)

}

func sysargs(argc int32, argv **byte) {

// skip over argv, envp to get to auxv

for argv_index(argv, n) != nil {

n++

}

if pairs := sysauxv(auxvp[:]); pairs != 0 {

auxv = auxvp[: pairs*2 : pairs*2]

return

}

{......}

pairs := sysauxv(auxvreadbuf[:])

}

//我们主要看sysauxv 方法

func sysauxv(auxv []uintptr) (pairs int) {

var i int

for ; auxv[i] != _AT_NULL; i += 2 {

tag, val := auxv[i], auxv[i+1]

switch tag {

case _AT_RANDOM:

// The kernel provides a pointer to 16-bytes

// worth of random data.

startupRandomData = (*[16]byte)(unsafe.Pointer(val))[:]

case _AT_PAGESZ:

//读取内存也大小,在第三讲go内存管理我们会再讲该变量

physPageSize = val

case _AT_SECURE:

secureMode = val == 1

}

archauxv(tag, val)

vdsoauxv(tag, val)

}

return i / 2

}

1.2 runtime·osinit(SB)

完成对 CPU 核心数的获取,以及设置内存页大小,特别注意!!!

在Container里获取到的runtime.NumCPU()=ncpu是主机的, 可通过https://github.com/uber-go/automaxprocs获取容器cpu,

有兴趣的了解的读者可以可以看看容器cgroup相关docker技术详解

func osinit() {

//核心的逻辑sched_getaffinit获取一个数据一堆计算后最终得到n cpu个数

//在sys_linux_amd64.s用汇编产生系统调用SYS_sched_getaffinity

//有兴趣可以搜索下SYS_sched_getaffinity

ncpu = getproccount()

//getHugePageSize() 提高内存管理的性能透明大页

//root@node1:~# cat /sys/kernel/mm/transparent_hugepage/hpage_pmd_size

//2097152

physHugePageSize = getHugePageSize()

{......}

osArchInit()

}

1.3 runtime·schedinit(SB)

主要负责各种运行时组件初始化工作

// src/runtime/proc.go

// The bootstrap sequence is:

//

// call osinit

// call schedinit

// make & queue new G

// call runtime·mstart

//

// The new G calls runtime·main.

func schedinit() {

// raceinit must be the first call to race detector.

// In particular, it must be done before mallocinit below calls racemapshadow.

gp := getg()

if raceenabled {

//race检测初始化

gp.racectx, raceprocctx0 = raceinit()

}

//最大m数量,包含状态未die的m

sched.maxmcount = 10000

//gc里的stw

// The world starts stopped.

worldStopped()

//栈初始化

stackinit()

//内存初始化

mallocinit()

fastrandinit() // must run before mcommoninit

//初始化当前m

mcommoninit(gp.m, -1)

//gc初始化,及三色标记法

gcinit()

//上次网络轮训的时间,网络部分讲

sched.lastpoll.Store(nanotime())

// 通过CPU核心数和 GOMAXPROCS 环境变量确定 P 的数量

procs := ncpu

if n, ok := atoi32(gogetenv("GOMAXPROCS")); ok && n > 0 {

procs = n

}

//关闭stw

// World is effectively started now, as P's can run.

worldStarted()

}

1.4 runtime·mstart(SB)

主goroutine的启动,及g0

main() {

mp := getg().m

// Max stack size is 1 GB on 64-bit, 250 MB on 32-bit.

// Using decimal instead of binary GB and MB because

// they look nicer in the stack overflow failure message.

// 执行栈最大限制:1GB(64位系统)或者 250MB(32位系统)

if goarch.PtrSize == 8 {

maxstacksize = 1000000000

} else {

maxstacksize = 250000000

}

// An upper limit for max stack size. Used to avoid random crashes

// after calling SetMaxStack and trying to allocate a stack that is too big,

// since stackalloc works with 32-bit sizes.

maxstackceiling = 2 * maxstacksize

if GOARCH != "wasm" { // no threads on wasm yet, so no sysmon

//启动监控用于垃圾回收,抢占调度

systemstack(func() {

newm(sysmon, nil, -1)

})

}

//锁死主线程,例如我们在调用c代码时候,goroutine需要独占线程,可用该方法独占m

// Lock the main goroutine onto this, the main OS thread,

// during initialization. Most programs won't care, but a few

// do require certain calls to be made by the main thread.

// Those can arrange for main.main to run in the main thread

// by calling runtime.LockOSThread during initialization

// to preserve the lock.

lockOSThread()

//执行init函数,编译器把包中所有的init函数存在runtime_inittasks里

doInit(runtime_inittasks) // Must be before defer.

// 启动垃圾回收器后台操作

gcenable()

needUnlock = false

unlockOSThread()

if isarchive || islibrary {

// A program compiled with -buildmode=c-archive or c-shared

// has a main, but it is not executed.

return

}

// 执行用户main包中的 main函数

fn := main_main // make an indirect call, as the linker doesn't know the address of the main package when laying down the runtime

fn()

if raceenabled {

//开启data -race的退出

runExitHooks(0) // run hooks now, since racefini does not return

racefini()

}

//退出并运行退出hook

runExitHooks(0)

exit(0)

}

1.5 总结

通过上文分析我们得知了go程序从runtime.rt0_amd64*(各个平台汇编文件不一样)启动,然后转到runtime.rt0_go调用,主要检查参数,以及设置cpu核心和内存

页大小,随后在schedinit中,对整个程序进行初始化,最后通过newproc和mstart由点读起转换g0执行

在g0中,systemstack负责后台监控,runtime_init运行初始化函数,main_main运行用户态main函数。

下面我们再来看看init的执行顺序

2 init执行顺序

由上文我们doInit(runtime_inittasks)得知,函数init会被linker存储到runtime_inittasks里,具体逻辑为

计算出执行它们的良好顺序,并发出该顺序供运行时使用,其次根据调用链,A倒入B包则会先初始化B包,

最后此函数计算所有 inittask 记录的排序,以便该顺序尊重所有依赖项,并在给定该限制的情况下,按字典顺序对 inittask 进行排序。

对比之前版本,现在按字典序排序,完整代码在如下两个文件中

// src/cmd/link/internal/ld/inittask.go

// cmd/compile/internal/pkginit/init.go

// Inittasks finds inittask records, figures out a good

// order to execute them in, and emits that order for the

// runtime to use.

//

// An inittask represents the initialization code that needs

// to be run for a package. For package p, the p..inittask

// symbol contains a list of init functions to run, both

// explicit user init functions and implicit compiler-generated

// init functions for initializing global variables like maps.

//

// In addition, inittask records have dependencies between each

// other, mirroring the import dependencies. So if package p

// imports package q, then there will be a dependency p -> q.

// We can't initialize package p until after package q has

// already been initialized.

//

// Package dependencies are encoded with relocations. If package

// p imports package q, then package p's inittask record will

// have a R_INITORDER relocation pointing to package q's inittask

// record. See cmd/compile/internal/pkginit/init.go.

//

// This function computes an ordering of all of the inittask

// records so that the order respects all the dependencies,

// and given that restriction, orders the inittasks in

// lexicographic order.

func (ctxt *Link) inittasks() {

switch ctxt.BuildMode {

case BuildModeExe, BuildModePIE, BuildModeCArchive, BuildModeCShared:

// Normally the inittask list will be run on program startup.

ctxt.mainInittasks = ctxt.inittaskSym("main..inittask", "go:main.inittasks")

case BuildModePlugin:

// For plugins, the list will be run on plugin load.

ctxt.mainInittasks = ctxt.inittaskSym(fmt.Sprintf("%s..inittask", objabi.PathToPrefix(*flagPluginPath)), "go:plugin.inittasks")

// Make symbol local so multiple plugins don't clobber each other's inittask list.

ctxt.loader.SetAttrLocal(ctxt.mainInittasks, true)

case BuildModeShared:

// Nothing to do. The inittask list will be built by

// the final build (with the -linkshared option).

default:

Exitf("unhandled build mode %d", ctxt.BuildMode)

}

// If the runtime is one of the packages we are building,

// initialize the runtime_inittasks variable.

ldr := ctxt.loader

if ldr.Lookup("runtime.runtime_inittasks", 0) != 0 {

t := ctxt.inittaskSym("runtime..inittask", "go:runtime.inittasks")

// This slice header is already defined in runtime/proc.go, so we update it here with new contents.

sh := ldr.Lookup("runtime.runtime_inittasks", 0)

sb := ldr.MakeSymbolUpdater(sh)

sb.SetSize(0)

sb.SetType(sym.SNOPTRDATA) // Could be SRODATA, but see issue 58857.

sb.AddAddr(ctxt.Arch, t)

sb.AddUint(ctxt.Arch, uint64(ldr.SymSize(t)/int64(ctxt.Arch.PtrSize)))

sb.AddUint(ctxt.Arch, uint64(ldr.SymSize(t)/int64(ctxt.Arch.PtrSize)))

}

}

1 linux cfs调度

在讲go调度前,我们大概linux进程调度是怎么做的,不感兴趣可跳到章节2,不影响整体阅读 感兴趣的建议阅读

Linux CFS 调度器:原理、设计与内核实现

内核CFS文档

1.1 csf概述

CFS Completely Fair Scheduler简称,即完全公平调度器

假如我们有一个cpu,有100%的使用权,有两个task在运行,cgroup设定各使用50%,cfs则负责讲cpu时间片分给这两个task(调度线程)

所以cfs就是维护进程时间方面的平衡,给进程分配相当数量的处理器

1.2 cfs大概实现原理

所有需要调度的进程(也可以是线程)都被存放在vruntime红黑树上,值为进程的虚拟运行时间,调度后会根据公式 vruntime = 实际运行时间*1024 / 进程权重来更新值,即插入红黑树最右侧,每次调度都会从最左侧取值

2 go schedule

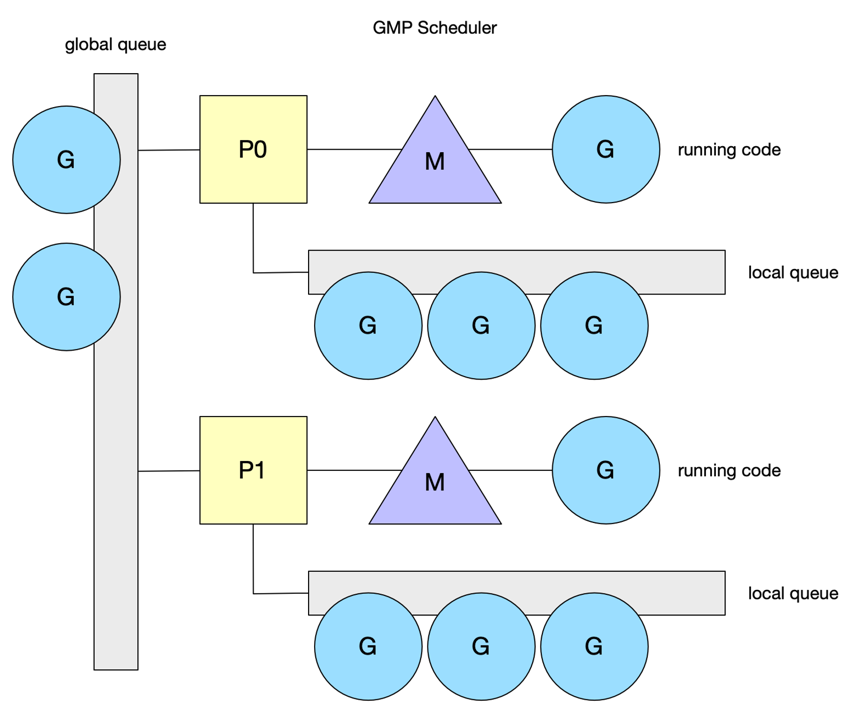

官方gmp设计文档 下面让我们来看下go schdule中相关的概念,即gmp

- g 用户态线程,代表一个goroutine,go中调度单位,主要包含当前执行的栈信息,以及当前goroutine的状态

- m 内核线程,就是被上文cfs调度的任务

- p 简单理解成队列,存放g需要被调度的资源信息,每个调度g的m都由一个p,阻塞或系统调用时间过长的m除外,会解绑p且创建新的m与该p绑定

- sched

我们先思考几个问题

- 我们有10个待执行的g,4个m如何调度,即g>m

- 我们有两个g,4个m,即g<m,则会存在m空闲

所以我们的调度器得知道所有m的状态,分配好每个m对应的g,

在go 1.0版本的时候,是多线程调度器,g存在一个全局队列,所有的m都是去全局队列拿g,这种情况可以很好的让每个m拿到对应的g,最大问题是拿g的时候锁竞争严重

在go1.11版本引入了word-stealing调度算法,上文文档有介绍该机制,引入了gmp模型,这时候每一个m对应每一个p,又引入了新问题,假如一个g长时间占用m,该m上的其它g得不到调用

在go1.12版本中引入了协作抢占试调用,1.14版本修改成了信号量抢占调用,下文我们专门讲解基于信号量的抢占式调用

大概的调度模型我们了解了,下面我们来看下m的细节,简单理解就是p有任务就拿,没有就去别的p偷,再没有就去全局偷,实在没事干就自旋,如果系统调用达到一定时间后

大概的调度模型我们了解了,下面我们来看下m的细节,简单理解就是p有任务就拿,没有就去别的p偷,再没有就去全局偷,实在没事干就自旋,如果系统调用达到一定时间后

自身的p就会被拿走找有没有自旋的m,有就给过去,没有就创建新的,下面我们来看看m的代码

2.1 gmp中的主要结构

m就是操作系统的线程,我们先看下几个重要的字段

m就是操作系统的线程,我们先看下几个重要的字段

// src/runtime/runtime2.go

type m struct {

// 用于执行调度指令的g

g0 *g // goroutine with scheduling stack

//处理signal的g

gsignal *g // signal-handling g

//线程本地存储

tls [tlsSlots]uintptr // thread-local storage (for x86 extern register)

//当前运行的g

curg *g // current running goroutine

// 用于执行 go 代码的处理器p

p puintptr // attached p for executing go code (nil if not executing go code)

//暂存的处理的

nextp puintptr

//执行系统调用之前使用线程的处理器p

oldp puintptr // the p that was attached before executing a syscall

//m没有运行work,正在寻找work即表示自身的自旋和非自旋状态

spinning bool // m is out of work and is actively looking for work

//cgo相关

ncgocall uint64 // number of cgo calls in total

ncgo int32 // number of cgo calls currently in progress

cgoCallersUse atomic.Uint32 // if non-zero, cgoCallers in use temporarily

cgoCallers *cgoCallers // cgo traceback if crashing in cgo call

//将自己与其他的 M 进行串联,即链表

alllink *m // 在 allm 上

}

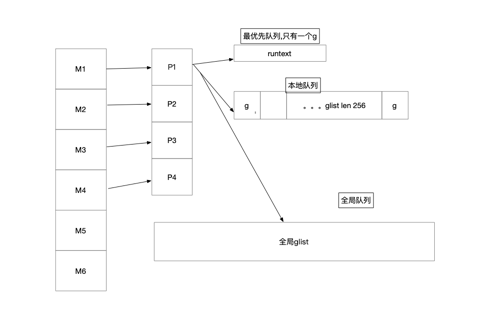

我们再来看看p的结构m,简单来说,p存在的意义是实现工作窃取(work stealing)算法,就是存放g的给m使用的本地队列, p主要存放了可被调度的goroutine,以及用于m执行的内存分配(不需要加锁)。 上有侵

// src/runtime/runtime2.go

// 其余很多字段都删减了,仅保留关键字段

type p struct {

id int32

//p的状态

status uint32 // one of pidle/prunning/...

//p链表

link puintptr

//链接到m

m muintptr // back-link to associated m (nil if idle)

//go的内存分配相关,后文我们将会详细讲解

mcache *mcache

pcache pageCache

//不同大小的可用的 defer 结构池

deferpool []*_defer // pool of available defer structs (see panic.go)

deferpoolbuf [32]*_defer

//可运行的goroutine队列,无锁访问

// Queue of runnable goroutines. Accessed without lock.

runqhead uint32

runqtail uint32

runq [256]guintptr

//简单来说就是插队的g

// runnext, if non-nil, is a runnable G that was ready'd by

// the current G and should be run next instead of what's in

// runq if there's time remaining in the running G's time

// slice. It will inherit the time left in the current time

// slice. If a set of goroutines is locked in a

// communicate-and-wait pattern, this schedules that set as a

// unit and eliminates the (potentially large) scheduling

// latency that otherwise arises from adding the ready'd

// goroutines to the end of the run queue.

//

// Note that while other P's may atomically CAS this to zero,

// only the owner P can CAS it to a valid G.

runnext guintptr

//可用的g状态链表

// Available G's (status == Gdead)

gFree struct {

gList

n int32

}

//todo 结合后面的发送数据详解

sudogcache []*sudog

sudogbuf [128]*sudog

//go内存分配的span,在内存分配文章详细讲解

// Cache of mspan objects from the heap.

mspancache struct {

// We need an explicit length here because this field is used

// in allocation codepaths where write barriers are not allowed,

// and eliminating the write barrier/keeping it eliminated from

// slice updates is tricky, more so than just managing the length

// ourselves.

len int

buf [128]*mspan

}

//优先被调度

// preempt is set to indicate that this P should be enter the

// scheduler ASAP (regardless of what G is running on it).

preempt bool

}

下面我们再简单看看g的结构

// src/runtime/runtime2.go

type g struct {

//存放栈内存 边界为[lo, hi)

//type stack struct {

// lo uintptr

// hi uintptr

//}

stack stack // offset known to runtime/cgo

stackguard0 uintptr // offset known to liblink

stackguard1 uintptr // offset known to liblink

_panic *_panic // innermost panic - offset known to liblink

_defer *_defer // innermost defer

//当前的m

m *m // current m; offset known to arm liblink

sched gobuf

//被唤醒时传递的参数

param unsafe.Pointer

atomicstatus atomic.Uint32

stackLock uint32 // sigprof/scang lock; TODO: fold in to atomicstatus

goid uint64

schedlink guintptr

//抢占信号

preempt bool // preemption signal, duplicates stackguard0 = stackpreempt

}

g主要就是定义了执行栈,以及调试等,执行的时候讲参数拷贝, 保存执行函数的入口地址

下面我们在来看看sched的结构

sched主要管理p,全局的g队列,defer池,以及m

// src/runtime/runtime2.go

type schedt struct {

lock mutex

//m相关

//自旋的m

midle muintptr // idle m's waiting for work

//自旋的m数

nmidle int32 // number of idle m's waiting for work

nmidlelocked int32 // number of locked m's waiting for work

//已创建的m和下一个mid,及序号表示mid

mnext int64 // number of m's that have been created and next M ID

maxmcount int32 // maximum number of m's allowed (or die)

nmsys int32 // number of system m's not counted for deadlock

nmfreed int64 // cumulative number of freed m's

ngsys atomic.Int32 // number of system goroutines

//空闲p链表

pidle puintptr // idle p's

//空闲p数量

npidle atomic.Int32

//自旋m的数量

nmspinning atomic.Int32 // See "Worker thread parking/unparking" comment in proc.go.

//全局队列

// Global runnable queue.

runq gQueue

runqsize int32

// Global cache of dead G's.

gFree struct {

lock mutex

stack gList // Gs with stacks //有栈的g链表

noStack gList // Gs without stacks //没栈的g链表

n int32

}

//一集缓存,上文中p结构体上有二级缓存

// Central cache of sudog structs.

sudoglock mutex

sudogcache *sudog

// Central pool of available defer structs.

deferlock mutex

deferpool *_defer

// freem is the list of m's waiting to be freed when their

// m.exited is set. Linked through m.freelink.

freem *m

}

在go启动流程中,我们了解到了schedinit,根据上文的gmp结构,我们看下关于调度器的初始化

// src/runtime/proc.go

// The bootstrap sequence is:

//

// call osinit

// call schedinit

// make & queue new G

// call runtime·mstart

//

// The new G calls runtime·main.

func schedinit() {

//m初始化

mcommoninit(gp.m, -1)

//p的初始化

if procresize(procs) != nil {

throw("unknown runnable goroutine during bootstrap")

}

}

m的初始化

// src/runtime/proc.go

func mcommoninit(mp *m, id int64) {

gp := getg()

//不是g0堆栈信息不展示给用户

// g0 stack won't make sense for user (and is not necessary unwindable).

if gp != gp.m.g0 {

callers(1, mp.createstack[:])

}

lock(&sched.lock)

if id >= 0 {

mp.id = id

} else {

//更新m的数量以及id

mp.id = mReserveID()

}

//栈相关

lo := uint32(int64Hash(uint64(mp.id), fastrandseed))

hi := uint32(int64Hash(uint64(cputicks()), ^fastrandseed))

if lo|hi == 0 {

hi = 1

}

// Same behavior as for 1.17.

// TODO: Simplify this.

if goarch.BigEndian {

mp.fastrand = uint64(lo)<<32 | uint64(hi)

} else {

mp.fastrand = uint64(hi)<<32 | uint64(lo)

}

//初始化一个新的m,子线程

mpreinit(mp)

//信号处理相关

if mp.gsignal != nil {

mp.gsignal.stackguard1 = mp.gsignal.stack.lo + stackGuard

}

// 添加到 allm 中,从而当它刚保存到寄存器或本地线程存储时候 GC 不会释放 g.m

// Add to allm so garbage collector doesn't free g->m

// when it is just in a register or thread-local storage.

mp.alllink = allm

// NumCgoCall() iterates over allm w/o schedlock,

// so we need to publish it safely.

atomicstorep(unsafe.Pointer(&allm), unsafe.Pointer(mp))

unlock(&sched.lock)

//cgo相关

// Allocate memory to hold a cgo traceback if the cgo call crashes.

if iscgo || GOOS == "solaris" || GOOS == "illumos" || GOOS == "windows" {

mp.cgoCallers = new(cgoCallers)

}

}

p的初始化

简单的描述下干了哪些事

- 按找nprocs的数量调整allp的大小(runtime.GOMAXPROCS()),并且初始化部分p

- 如果当前p里还有g,没有被移除,讲p状态设置未_Prunning,否则将第一个p给当前的m绑定

- 从allp移除不需要的p,将释放的p队列的任务扔进全局队列

- 最后挨个检查p,将没有任务的p放入idle队列,并将初当前p且没有任务的p连成链表 p的状态分别为_Pidle,_Prunning,_Psyscall,_Pgcstop,_Pdead

- _Pidle 未运行g的p

- _Prunning 已经被m绑定

- _Psyscall 没有运行用户代码,与系统调用的m解绑后的状态

- _Pgcstop P因gc STW 而停止

- _Pdead GOMAXPROCS收缩不需要这个p了,逻辑我们下文有讲

func procresize(nprocs int32) *p {

//获取p的个数

old := gomaxprocs

if old < 0 || nprocs <= 0 {

throw("procresize: invalid arg")

}

if traceEnabled() {

traceGomaxprocs(nprocs)

}

//更新统计信息,记录此次修改gomaxprocs的时间

// update statistics

now := nanotime()

if sched.procresizetime != 0 {

sched.totaltime += int64(old) * (now - sched.procresizetime)

}

sched.procresizetime = now

maskWords := (nprocs + 31) / 32

// 必要时增加 allp

// 这个时候本质上是在检查用户代码是否有调用过 runtime.MAXGOPROCS 调整 p 的数量

// 此处多一步检查是为了避免内部的锁,如果 nprocs 明显小于 allp 的可见数量(因为 len)

// 则不需要进行加锁

// Grow allp if necessary.

if nprocs > int32(len(allp)) {

//与 retake 同步,它可以同时运行,因为它不在 P 上运行

// Synchronize with retake, which could be running

// concurrently since it doesn't run on a P.

lock(&allpLock)

if nprocs <= int32(cap(allp)) {

//nprocs变小,则只保留allp数组里0-nprocs个p

allp = allp[:nprocs]

} else {

//调大创建新的p

nallp := make([]*p, nprocs)

//保证旧p不被丢弃

// Copy everything up to allp's cap so we

// never lose old allocated Ps.

copy(nallp, allp[:cap(allp)])

allp = nallp

}

if maskWords <= int32(cap(idlepMask)) {

idlepMask = idlepMask[:maskWords]

timerpMask = timerpMask[:maskWords]

} else {

nidlepMask := make([]uint32, maskWords)

// No need to copy beyond len, old Ps are irrelevant.

copy(nidlepMask, idlepMask)

idlepMask = nidlepMask

ntimerpMask := make([]uint32, maskWords)

copy(ntimerpMask, timerpMask)

timerpMask = ntimerpMask

}

unlock(&allpLock)

}

//初始化新的p

// initialize new P's

for i := old; i < nprocs; i++ {

pp := allp[i]

if pp == nil {

pp = new(p)

}

//具体初始化流程 我们会在下文讲解

pp.init(i)

atomicstorep(unsafe.Pointer(&allp[i]), unsafe.Pointer(pp))

}

gp := getg()

//如果当前的p的id(第一个p id = 1)大于调整后的p的数量,一般指处理器数量

if gp.m.p != 0 && gp.m.p.ptr().id < nprocs {

// continue to use the current P

gp.m.p.ptr().status = _Prunning

gp.m.p.ptr().mcache.prepareForSweep()

} else {

// release the current P and acquire allp[0].

//

// We must do this before destroying our current P

// because p.destroy itself has write barriers, so we

// need to do that from a valid P.

if gp.m.p != 0 {

if traceEnabled() {

// Pretend that we were descheduled

// and then scheduled again to keep

// the trace sane.

traceGoSched()

traceProcStop(gp.m.p.ptr())

}

gp.m.p.ptr().m = 0

}

//切换到p0执行

gp.m.p = 0

pp := allp[0]

pp.m = 0

pp.status = _Pidle

//将p0绑定到当前m

acquirep(pp)

if traceEnabled() {

traceGoStart()

}

}

//gmp已经被设置,不需要mcache0引导

// g.m.p is now set, so we no longer need mcache0 for bootstrapping.

mcache0 = nil

//释放未使用的p资源

// release resources from unused P's

for i := nprocs; i < old; i++ {

pp := allp[i]

pp.destroy()

// can't free P itself because it can be referenced by an M in syscall

}

// Trim allp.

if int32(len(allp)) != nprocs {

lock(&allpLock)

allp = allp[:nprocs]

idlepMask = idlepMask[:maskWords]

timerpMask = timerpMask[:maskWords]

unlock(&allpLock)

}

var runnablePs *p

for i := nprocs - 1; i >= 0; i-- {

pp := allp[i]

if gp.m.p.ptr() == pp {

continue

}

pp.status = _Pidle

//在本地队列里没有g

if runqempty(pp) {

//放入_Pidle队列里

pidleput(pp, now)

} else {

//p队列里有g,代表有任务,绑定一个m

pp.m.set(mget())

//构建可运行的p链表

pp.link.set(runnablePs)

runnablePs = pp

}

}

stealOrder.reset(uint32(nprocs))

var int32p *int32 = &gomaxprocs // make compiler check that gomaxprocs is an int32

atomic.Store((*uint32)(unsafe.Pointer(int32p)), uint32(nprocs))

if old != nprocs {

//通知限制器p数量改了

// Notify the limiter that the amount of procs has changed.

gcCPULimiter.resetCapacity(now, nprocs)

}

//返回所有包含本地任务的链表

return runnablePs

}

//初始化p,新创建或者之前被销毁的p

// init initializes pp, which may be a freshly allocated p or a

// previously destroyed p, and transitions it to status _Pgcstop.

func (pp *p) init(id int32) {

// p 的 id 就是它在 allp 中的索引

pp.id = id

// 新创建的 p 处于 _Pgcstop 状态

pp.status = _Pgcstop

pp.sudogcache = pp.sudogbuf[:0]

pp.deferpool = pp.deferpoolbuf[:0]

pp.wbBuf.reset()

// 为 P 分配 cache 对象

if pp.mcache == nil {

//id == 0 说明这是引导阶段初始化第一个 p

if id == 0 {

if mcache0 == nil {

throw("missing mcache?")

}

// Use the bootstrap mcache0. Only one P will get

// mcache0: the one with ID 0.

pp.mcache = mcache0

} else {

pp.mcache = allocmcache()

}

}

if raceenabled && pp.raceprocctx == 0 {

if id == 0 {

pp.raceprocctx = raceprocctx0

raceprocctx0 = 0 // bootstrap

} else {

pp.raceprocctx = raceproccreate()

}

}

lockInit(&pp.timersLock, lockRankTimers)

// This P may get timers when it starts running. Set the mask here

// since the P may not go through pidleget (notably P 0 on startup).

timerpMask.set(id)

// Similarly, we may not go through pidleget before this P starts

// running if it is P 0 on startup.

idlepMask.clear(id)

}

g 的初始化

g的状态如下,不需要记住,大概有印象就行了,不清楚的时候可以查到

- _Gidle 表示这个 goroutine 刚刚分配,还没有初始化

- _Grunnable 表示这个 goroutine 在运行队列中。它当前不在执行用户代码。堆栈不被拥有。

- _Grunning 意味着这个 goroutine 可以执行用户代码。堆栈由这个 goroutine 拥有。它不在运行队列中。它被分配了一个 M 和一个 P(g.m 和 g.m.p 是有效的)

- _Gsyscall 表示这个 goroutine 正在执行一个系统调用。它不执行用户代码。堆栈由这个 goroutine 拥有。它不在运行队列中。它被分配了一个 m

- _Gwaiting 表示这个 goroutine 在运行时被阻塞

- _Gmoribund_unused 暂未使用

- _Gdead 这个 goroutine当前未被使用。它可能刚刚退出,处于空闲列表中,或者刚刚被初始化。它不执行用户代码。它可能有也可能没有分配堆栈。 G 及其堆栈(如果有)由退出G或从空闲列表中获得G的M所有。

- _Genqueue_unused 暂未使用

- _Gcopystack 意味着这个 goroutine 的堆栈正在被移动

- _Gpreempted 意味着这个 goroutine 停止了自己以进行 suspendG 抢占

//gc相关的g状态,放到后面章节讲解

_Gscan = 0x1000

_Gscanrunnable = _Gscan + _Grunnable // 0x1001

_Gscanrunning = _Gscan + _Grunning // 0x1002

_Gscansyscall = _Gscan + _Gsyscall // 0x1003

_Gscanwaiting = _Gscan + _Gwaiting // 0x1004

_Gscanpreempted = _Gscan + _Gpreempted // 0x1009

启动go的方法为runtime.newproc,整体流程如下

- 调用runtime.newproc1

- 通过gfget从p.gFree上获取一个g,如果p.gFree为空,则通过sched.gFree.stack.pop()从全局队列捞一批,当p.gFree=32||sched.gFree=nil中止

- 如果没有获取到,通过malg与allgadd分配一个新的g简称newg,然后把newg放入p的runnext上

- 若runnext为空,则直接分配,若runnext不为空,则把runnext上存在的旧的g简称oldg放入p.runq即p的本地队列末尾,若runq满了

- 调用runqputslow将p.runq上的g分配一半到全局队列,同时将oldg添加到末尾

+---------------------+

| go func |

+---------------------+

|

|

v

+---------------------+

| runtime.newproc1 |

+---------------------+

|

|

v

+---------------------+

| malg && allgadd |

+---------------------+

|

|

v

+--------------+ +-------------------------------+

| wakep | <---------- | runqput |

+--------------+ +-------------------------------+

| ^ ^

| | |

v | |

+--------------+ runnext空 +---------------------+ | |

| pp.runq=newg | <---------- | runnext | | |

+--------------+ +---------------------+ | |

| | | |

| | runnext非空 | |

| v | |

| +---------------------+ | |

| | pp.runnext=newg | | |

| +---------------------+ | |

| | | |

| | | |

| v | |

| +---------------------+ | |

| | put oldg to pp.runq | | |

| +---------------------+ | |

| | | |

| | pp.runq full | |

| v | |

| +---------------------+ | |

| | runqputslow | -+ |

| +---------------------+ |

| |

+--------------------------------------------------------+

// src/runtime/proc.go

// Create a new g running fn.

// Put it on the queue of g's waiting to run.

// The compiler turns a go statement into a call to this.

func newproc(fn *funcval) {

//很熟悉的方法了,获取一个g

gp := getg()

//获取pc/ip寄存器的值

pc := getcallerpc()

systemstack(func() {

//创建一个新的 g,状态为_Grunnable

newg := newproc1(fn, gp, pc)

//pp即我们上文中的p,该m上绑定的p

pp := getg().m.p.ptr()

//runqput 尝试将 g 放入本地可运行队列。如果 next 为假,runqput 将

//g 添加到可运行队列的尾部。如果 next 为真,则 runqput 将 g 放入 pp.runnext 槽中

//如果运行队列已满,则 runnext 将 g 放入全局队列。仅由所有者 P 执行。

//我们回忆下,pp.runnext是插队的g,将在下一个调用

runqput(pp, newg, true)

//todo 暂时理解不够深刻,后续二刷

//如果主m已经启动,尝试再添加一个 P 来执行 G

if mainStarted {

wakep()

}

})

}

下面我们再来看下newproc1(),即创建一个新的g

// Create a new g in state _Grunnable, starting at fn. callerpc is the

// address of the go statement that created this. The caller is responsible

// for adding the new g to the scheduler.

func newproc1(fn *funcval, callergp *g, callerpc uintptr) *g {

if fn == nil {

fatal("go of nil func value")

}

mp := acquirem() // disable preemption because we hold M and P in local vars.

//获取当前m上绑定的p

pp := mp.p.ptr()

//根据p获取一个g,从g上的gFree取,如果没有,从全局捞一批回来(从sched.gFree里取,直到sched.gFree没有或者pp.gFree里>=32为止)

newg := gfget(pp)

//如果没取到g(初始化是没有g的)

if newg == nil {

// stackMin = 2048

//创建2k的栈

newg = malg(stackMin)

//将新创建的g从_Gidle更新为_Gdead

casgstatus(newg, _Gidle, _Gdead)

//将_Gdead的栈添加到allg,gc不会扫描未初始化的栈

allgadd(newg) // publishes with a g->status of Gdead so GC scanner doesn't look at uninitialized stack.

}

//省略了部分各种赋值堆栈指针给调度sched,以及g

//由_Gidle变成了_Gdead变成了_Grunnable

//分配好寄存器啥的,将g从_Gdead变成_Grunnable

casgstatus(newg, _Gdead, _Grunnable)

//分配go id

newg.goid = pp.goidcache

//用于下一次go分配

pp.goidcache++

if raceenabled {

//分配data race的ctx

newg.racectx = racegostart(callerpc)

if newg.labels != nil {

// See note in proflabel.go on labelSync's role in synchronizing

// with the reads in the signal handler.

racereleasemergeg(newg, unsafe.Pointer(&labelSync))

}

}

if traceEnabled() {

traceGoCreate(newg, newg.startpc)

}

//恢复抢占请求

releasem(mp)

return newg

}

func runqput(pp *p, gp *g, next bool) {

//true

if next {

//将newg分配到runnext,直到成功为止

retryNext:

oldnext := pp.runnext

if !pp.runnext.cas(oldnext, guintptr(unsafe.Pointer(gp))) {

goto retryNext

}

if oldnext == 0 {

return

}

// Kick the old runnext out to the regular run queue.

gp = oldnext.ptr()

}

retry:

h := atomic.LoadAcq(&pp.runqhead) // load-acquire, synchronize with consumers

t := pp.runqtail

//如果本地队列没满的情况,将oldg分配到p的本地队列

if t-h < uint32(len(pp.runq)) {

pp.runq[t%uint32(len(pp.runq))].set(gp)

atomic.StoreRel(&pp.runqtail, t+1) // store-release, makes the item available for consumption

return

}

//本地p上的glist满了,将oldg放入全局glist

if runqputslow(pp, gp, h, t) {

return

}

// the queue is not full, now the put above must succeed

goto retry

}

// Put g and a batch of work from local runnable queue on global queue.

// Executed only by the owner P.

//将p上的glist一次取1/2放入全局glist,同时将gp插入末尾

func runqputslow(pp *p, gp *g, h, t uint32) bool {

var batch [len(pp.runq)/2 + 1]*g

// First, grab a batch from local queue.

n := t - h

n = n / 2

if n != uint32(len(pp.runq)/2) {

throw("runqputslow: queue is not full")

}

for i := uint32(0); i < n; i++ {

batch[i] = pp.runq[(h+i)%uint32(len(pp.runq))].ptr()

}

if !atomic.CasRel(&pp.runqhead, h, h+n) { // cas-release, commits consume

return false

}

batch[n] = gp

if randomizeScheduler {

for i := uint32(1); i <= n; i++ {

j := fastrandn(i + 1)

batch[i], batch[j] = batch[j], batch[i]

}

}

// Link the goroutines.

for i := uint32(0); i < n; i++ {

batch[i].schedlink.set(batch[i+1])

}

var q gQueue

q.head.set(batch[0])

q.tail.set(batch[n])

// Now put the batch on global queue.

lock(&sched.lock)

globrunqputbatch(&q, int32(n+1))

unlock(&sched.lock)

return true

}

我们来总结下创建g的过程

- 尝试从本地p获取已经执行过的g,本地p为0则一次从全局队列捞32个,再返回p

- 如果拿不到g(初始化没有),则创建一个g,并分配线程执行栈,g处于_Gidle状态

- 创建完成后,g变成了 _Gdead,然后执行函数的入口地址跟参数, 初始化sp,等等存储一份,调用后并存储sched并将状态变为_Grunnable

- 给新创建的goroutine分配id,并将g塞入本地队列或者插入p.runnext(插队),满了则放入p.runq末尾,p.runq也满了则同时将p的runq通过runqputslow函数

- 分一半放入全局队列

schedule消费端

上一节我们大概了解下生产端的逻辑,我们再来一起看下消费端逻辑,大概逻辑如下图所示,下面我们一步一步看下每个流程的具体代码

+ - - - - - - - - - - -+ +- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -+

' schedule: ' ' top: '

' ' ' '

' +------------------+ ' +-----------------+ ' +--------------+ +----------------+ +-------------+ +---------+ +-----------+ ' +----+

' | findrunnable | ' --> | top | --> ' | wakefing | --> | runqget1 | --> | globrunqget | --> | netpoll | --> | runqsteal | ' --> | gc |

' +------------------+ ' +-----------------+ ' +--------------+ +----------------+ +-------------+ +---------+ +-----------+ ' +----+

' ' ' '

' ' +- - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - - -+

' +------------------+ ' +-----------------+ +--------------+ +----------------+

' | schedule | ' --> | runtime.execute | --> | runtime.gogo | --> | runtime.goexit |

' +------------------+ ' +-----------------+ +--------------+ +----------------+

' +------------------+ '

' | globrunqget/61 | '

' +------------------+ '

' +------------------+ '

' | runnableGcWorker | '

' +------------------+ '

' +------------------+ '

' | runqget | '

' +------------------+ '

' '

+ - - - - - - - - - - -+

schedule()主函数

1 通过findrunnable()来获取一个g,下文详细根据代码讲解

2 通过execute(gp, inheritTime)将g运行在当前m上

3 gogo(&gp.sched) //通过汇编实现的,具体

4 goexit()执行完毕后,重新回到schedule()

//已删除多余部分代码

func schedule() {

//获取m

mp := getg().m

top:

//省略判断该go是否可被调度的代码

pp := mp.p.ptr()

//获取一个可被调度的g

gp, inheritTime, tryWakeP := findRunnable() // blocks until work is available